Most software and systems generate audit logs.

They provide a way to examine what activities have occurred on a system and are typically used for diagnostics, performance analysis, and error correction. System administrators, network engineers, developers, and help desk personnel all use this data to do their jobs and maintain system stability. Audit logs have also taken on new importance for cybersecurity and are often the basis of forensic analysis, security analysis, and criminal prosecution.

Like other types of log data, audit logs are useless when they are incorrectly configured, compromised, or corrupted. Because of their growing importance, and to help you extract the most value from them, we’ve put together some useful information on the basics of audit logging.

What are audit logs?

Let’s start with the basics — what exactly are audit logs?

Audit logs vary between applications, devices, systems, and operating systems, but they are similar in that they capture events that show “who” did “what” and “how” the system behaved. An administrator or developer will want to examine all types of log files to get a complete picture of normal and abnormal events on their network. A log file event indicates what action was attempted and whether it was successful. This is critical to check during routine activities like updates and patching, and also when determining whether a system component is failing or incorrectly configured.

For the sake of space and time, we will examine primarily operating system logs, but you’d do well to examine all systems in your environment to get a good understanding of the logs, log configurations, file formats, and event types that you can gather.

Here are common Linux log file names and a short description of their usage:

- /var/log/messages : General and system messages

- /var/log/auth.log : Authentication logs (Debian/Ubuntu)

- /var/log/kern.log : Kernel logs

- /var/log/cron.log : Crond logs (cron job)

- /var/log/maillog : Mail server logs

- /var/log/qmail/ : Qmail log directory (more files inside this directory)

- /var/log/httpd/ : Apache access and error logs directory

- /var/log/lighttpd/ : Lighttpd access and error logs directory

- /var/log/boot.log : System boot log

- /var/log/mysqld.log : MySQL database server log file

- /var/log/secure : Authentication log (RHEL/CentOS)

- /var/run/utmp, /var/log/wtmp or /var/log/btmp : Login records files

- /var/log/yum.log : Yum command log file

For example, for security analysis you may want to examine user session (login) activity on a Linux system. Linux stores session information in several *tmp files: utmp tracks current sessions, wtmp keeps a history of logins and logouts, and btmp records failed login attempts.

To display the contents of /var/run/utmp, run the following command:

utmpdump /var/run/utmp

![display contents]()

Do the same with /var/log/wtmp:

utmpdump /var/log/wtmp

![display contents 2]()

And finally with /var/log/btmp:

utmpdump /var/log/btmp

![display contents 3]()

The output format in these three cases is similar. Note that the event records in the utmp and btmp are arranged chronologically, while in the wtmp, the order is reversed.
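Because utmpdump prints each record as a row of bracketed fields, its output is straightforward to load into a script for further analysis. The following is a minimal sketch, assuming the usual eight-field layout (type, pid, id, user, line, host, address, time). The `LoginRecord` name and the sample line are hypothetical, and the timestamp format differs between utmpdump versions, so it is kept as a plain string here.

```python
import re
from typing import NamedTuple, Optional

# utmpdump prints each record as eight bracketed fields:
# type, pid, id, user, line, host, addr, time.
FIELD_RE = re.compile(r"\[([^\]]*)\]")


class LoginRecord(NamedTuple):
    record_type: int
    pid: int
    terminal_id: str
    user: str
    line: str
    host: str
    addr: str
    time: str  # left as text because the format varies by utmpdump version


def parse_utmpdump_line(text: str) -> Optional[LoginRecord]:
    """Parse one line of utmpdump output; return None if it does not match."""
    fields = [field.strip() for field in FIELD_RE.findall(text)]
    if len(fields) != 8:
        return None
    try:
        return LoginRecord(int(fields[0]), int(fields[1]), *fields[2:])
    except ValueError:
        return None


# Hypothetical sample line, not taken from a real system.
sample = (
    "[7] [01432] [ts/0] [alice   ] [pts/0       ] "
    "[192.0.2.10          ] [192.0.2.10     ] [2024-01-15T09:30:00,000000+00:00]"
)
record = parse_utmpdump_line(sample)
print(record.user, record.line, record.host)
```

In practice you would feed the function each line of `utmpdump /var/log/wtmp` output and skip the lines for which it returns `None`.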

With Microsoft Windows, event management is typically done with the Event Viewer application rather than the command prompt. The screenshots below illustrate the Event Viewer interface, which allows you to examine logs for Security, Administration, System, and Setup activities. (With Windows Server 2008/Vista and later, the logs are stored in the %SystemRoot%\system32\winevt\logs directory.)

![overview and summary]()

A similar Windows login/session audit log event might look like this:

![windows]()

Some important points to keep in mind:

- While event logs vary in readability, these types of files gather a lot of potentially sensitive information and should not be made publicly available.

- Many native log file systems need to be configured to ensure security and continuity. Examine these audit log settings to ensure log files are secured and tuned to your operational needs. This can include changing the size of the log files, changing their location, and adjusting the specific events that are captured.

- Most native log file systems do not automatically alert the administrator/end user when critical events occur.

- Because event logs from any operating system can provide detailed information about who did what and when, correlating this information can tell the “story” of the activity that led to performance, security, and application errors.

- Learning to “read” audit event logs is a skill that can take time to develop, especially if your team needs to learn the record formats and tools of multiple log systems. Difficulty in understanding logs can discourage audit log reviews and reduce the value of the logs.
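Since most native log systems do not raise alerts on their own, a small script can fill part of that gap. The sketch below counts failed SSH password attempts per source address and flags addresses that reach a threshold. It assumes the "Failed password" message format written by OpenSSH; the sample lines, the function names, and the threshold of 3 are all hypothetical choices for illustration.

```python
import re
from collections import Counter
from typing import Iterable, List

# Matches the "Failed password" message that OpenSSH's sshd writes to the auth log.
FAILED_RE = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+)"
)


def failed_logins_by_ip(lines: Iterable[str]) -> Counter:
    """Count failed password attempts per source IP address."""
    counts = Counter()
    for line in lines:
        match = FAILED_RE.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


def ips_over_threshold(lines: Iterable[str], threshold: int = 3) -> List[str]:
    """Return the source IPs with at least `threshold` failed attempts."""
    counts = failed_logins_by_ip(lines)
    return sorted(ip for ip, total in counts.items() if total >= threshold)


# Hypothetical auth log lines, not taken from a real system.
sample_lines = [
    "Jan 15 09:30:01 web01 sshd[2101]: Failed password for invalid user admin from 203.0.113.5 port 50122 ssh2",
    "Jan 15 09:30:04 web01 sshd[2101]: Failed password for root from 203.0.113.5 port 50122 ssh2",
    "Jan 15 09:30:09 web01 sshd[2103]: Failed password for root from 203.0.113.5 port 50130 ssh2",
    "Jan 15 09:31:15 web01 sshd[2110]: Failed password for alice from 198.51.100.7 port 41822 ssh2",
    "Jan 15 09:31:40 web01 sshd[2110]: Accepted password for alice from 198.51.100.7 port 41822 ssh2",
]
suspicious = ips_over_threshold(sample_lines)
print(suspicious)
```

A real deployment would read from the live log and send a notification rather than print, but the counting logic stays the same.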

Why audit logging?

Now that we have a better understanding of what audit logs are, let’s review some of the core benefits of collecting this data from your environment, whether it’s a data center, server/workstation, or even application logs.

Promote accountability

Event logs should be configured to help an organization capture security, authentication, and privileged-activity information. This should support company policy or best practices so that systems remain stable and users are held accountable for their actions, which the event logs track.

For example, audit logs can be used in tandem with access controls to identify and provide information about users suspected of improper modification of access privileges or data. To do this effectively, event logs have to be captured and stored regularly and securely to show behavior before and after an event has occurred.

Reconstruction of events

Event logs may also be used to essentially “replay” events in sequence to help understand how a damaging event occurred. Analysis can distinguish between system, application, and operator errors. Knowing the system conditions just before an error occurred helps prevent future failures. Additionally, if logs are configured to capture detailed transactions, data can sometimes be reconstructed from them.
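Replaying events in sequence usually means interleaving records from several logs by time. Here is a minimal sketch using the standard library's `heapq.merge`, assuming each source is already sorted by timestamp and all timestamps share a time zone. The event tuples and the `merge_timelines` helper are hypothetical.

```python
import heapq
from datetime import datetime, timezone
from typing import Iterable, Iterator, Tuple

Event = Tuple[datetime, str, str]  # (timestamp, source, message)


def merge_timelines(*sources: Iterable[Event]) -> Iterator[Event]:
    """Merge event streams that are each already sorted by timestamp."""
    return heapq.merge(*sources, key=lambda event: event[0])


def at(hour: int, minute: int) -> datetime:
    """Build a UTC timestamp on a fixed, made-up day."""
    return datetime(2024, 1, 15, hour, minute, tzinfo=timezone.utc)


# Hypothetical events from two different logs.
auth_events = [
    (at(9, 30), "auth", "login alice"),
    (at(9, 32), "auth", "sudo alice"),
]
app_events = [
    (at(9, 31), "app", "configuration changed"),
    (at(9, 33), "app", "service crashed"),
]

timeline = list(merge_timelines(auth_events, app_events))
for timestamp, source, message in timeline:
    print(timestamp.isoformat(), source, message)
```

Because `heapq.merge` is lazy, the same approach works on large log files read line by line, as long as each input stays in chronological order.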

Security and forensics

Because event logs work in concert with logical access controls, actions can be traced to specific users and devices. This information can reveal when a user account may have been compromised, and whether its privileges were then escalated to access specific files or directories containing sensitive information. Logs can also show who copied, printed, or deleted specific files, and when.

Audit logging requirements

From the information above, it is fairly clear that audit logging is system-based: there are audit logging systems on network devices and within applications and operating systems. Within logging services on stand-alone systems, there can be further log subtypes for gathering specific types of events, such as security events, system events, and events from specific services.

Modern web-oriented systems are built on auto-scaling components and have blurred the lines between traditional “servers” and the applications that run on them. Audit logging now involves collecting data from a large number of sources, which poses a series of challenges that call for a log management solution: data collection, storage, protection, parsing, and subsequent analysis.

When looking for a solution, some of the key considerations are:

- Normalization/parsing – to enable efficient analysis of the data, your solution needs to support the normalization of data. Parsing the different audit logs into structured fields allows for easier reading, searching, and analysis.

- Alerting – getting notified when a user has performed an unauthorized action, for example, is a crucial element of audit logging and facilitates a more proactive approach.

- Security – safe routing and storage of the different audit logs to a secure location. Make sure the solution stores the logs in a secure manner that addresses company retention policy. Some products provide data compression and other means to address high volume logs.

- Correlation – to be able to effectively connect the dots and identify a sequence of events, the ability to create correlation rules is also important.
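To make the normalization point concrete, the sketch below parses two differently shaped log lines into the same set of structured fields. The first parser assumes the Apache Common Log Format; the second assumes a made-up key=value application log. The output field names (`timestamp`, `user`, `source`, `action`, `outcome`) are an illustrative schema, not one defined by any particular product.

```python
import re
from datetime import datetime, timezone

# Apache Common Log Format: host ident user [time] "request" status size
CLF_RE = re.compile(
    r'(?P<host>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+'
)


def normalize_clf(line: str) -> dict:
    """Map a Common Log Format line onto the shared schema."""
    match = CLF_RE.match(line)
    if match is None:
        raise ValueError("not a Common Log Format line")
    when = datetime.strptime(match.group("time"), "%d/%b/%Y:%H:%M:%S %z")
    return {
        "timestamp": when.astimezone(timezone.utc).isoformat(),
        "user": match.group("user"),
        "source": match.group("host"),
        "action": match.group("request"),
        "outcome": "success" if int(match.group("status")) < 400 else "failure",
    }


def normalize_kv(line: str) -> dict:
    """Map a hypothetical key=value application log line onto the shared schema."""
    fields = dict(pair.split("=", 1) for pair in line.split())
    when = datetime.fromisoformat(fields["ts"])
    return {
        "timestamp": when.astimezone(timezone.utc).isoformat(),
        "user": fields["user"],
        "source": fields["ip"],
        "action": fields["action"],
        "outcome": "success" if fields["status"] == "ok" else "failure",
    }


# Hypothetical sample lines.
web_line = '192.0.2.10 - alice [15/Jan/2024:10:30:00 +0100] "GET /reports HTTP/1.1" 200 512'
app_line = "ts=2024-01-15T09:31:00+00:00 user=alice ip=192.0.2.10 action=delete_record status=denied"

web_event = normalize_clf(web_line)
app_event = normalize_kv(app_line)
print(web_event)
print(app_event)
```

Once both events share field names and a UTC timestamp, searching for everything a given user did, or writing a correlation rule across the two sources, becomes a simple filter.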

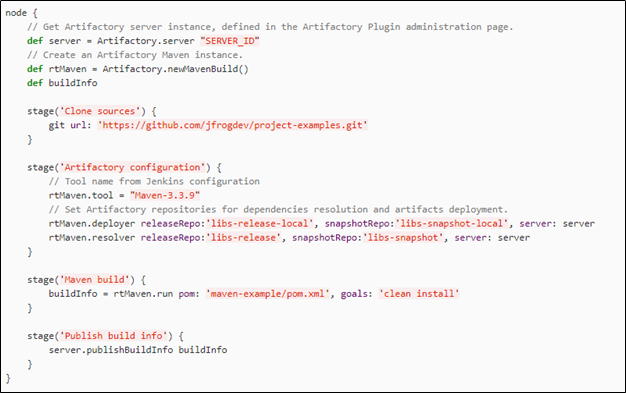

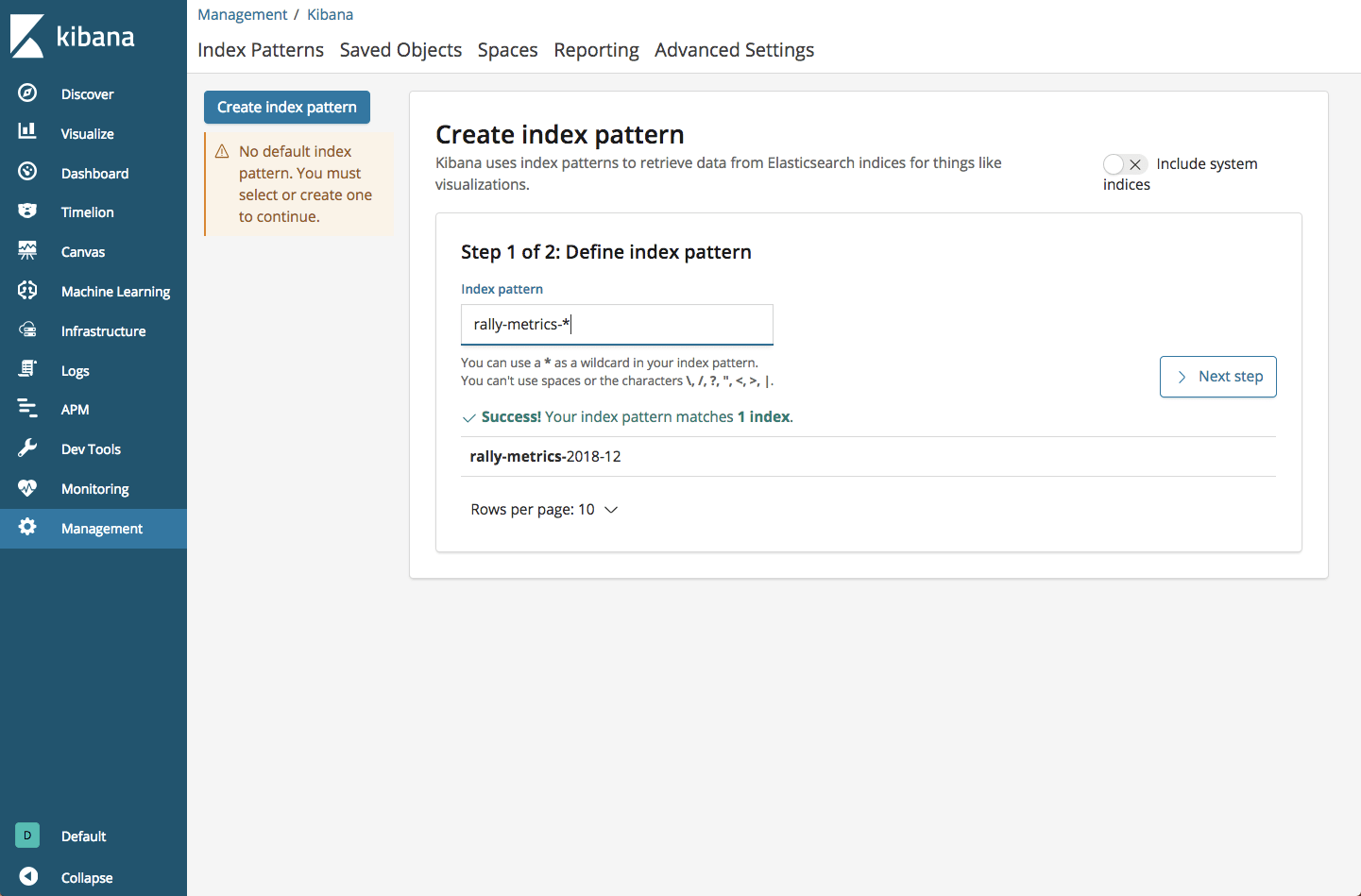

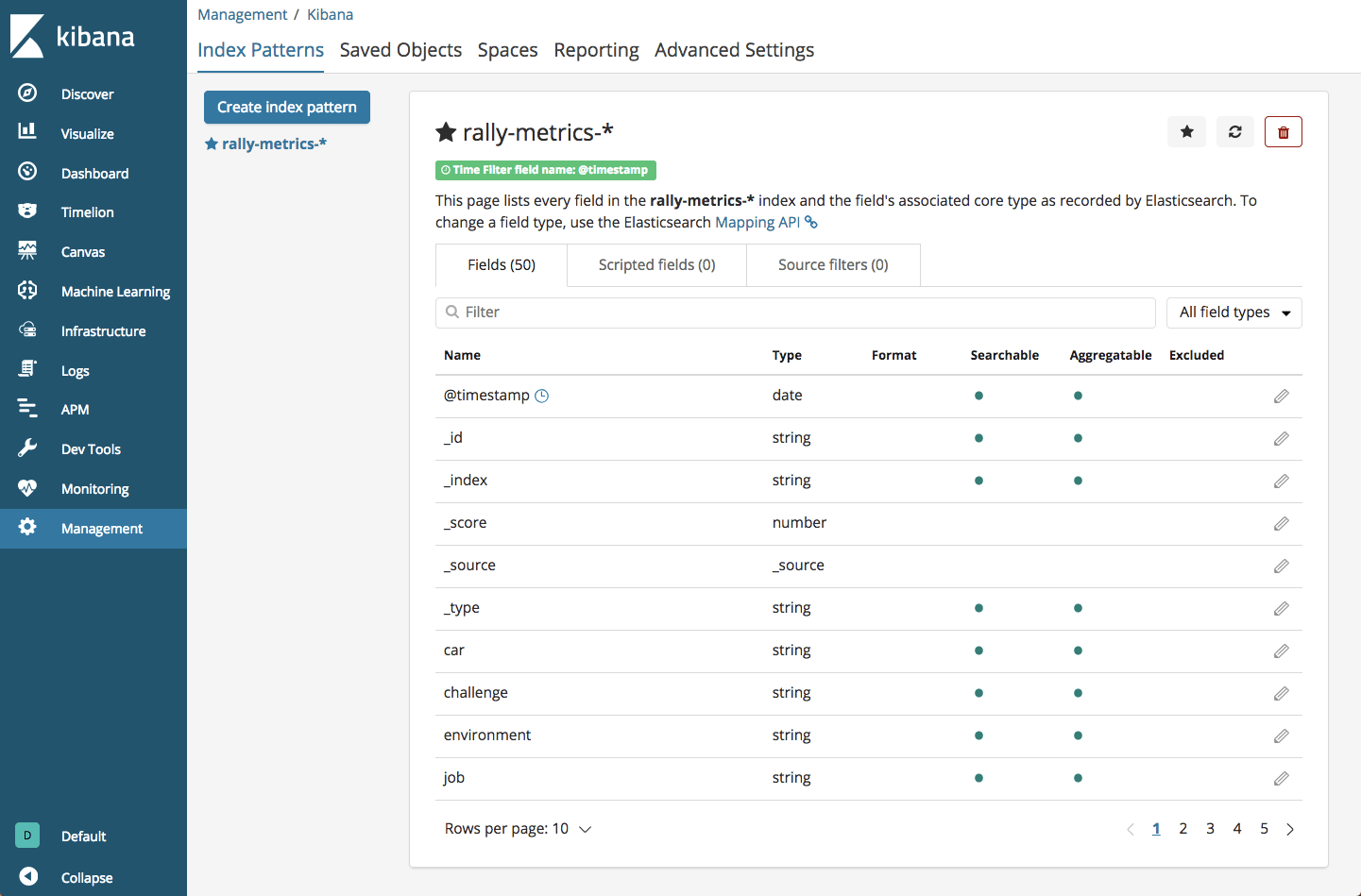

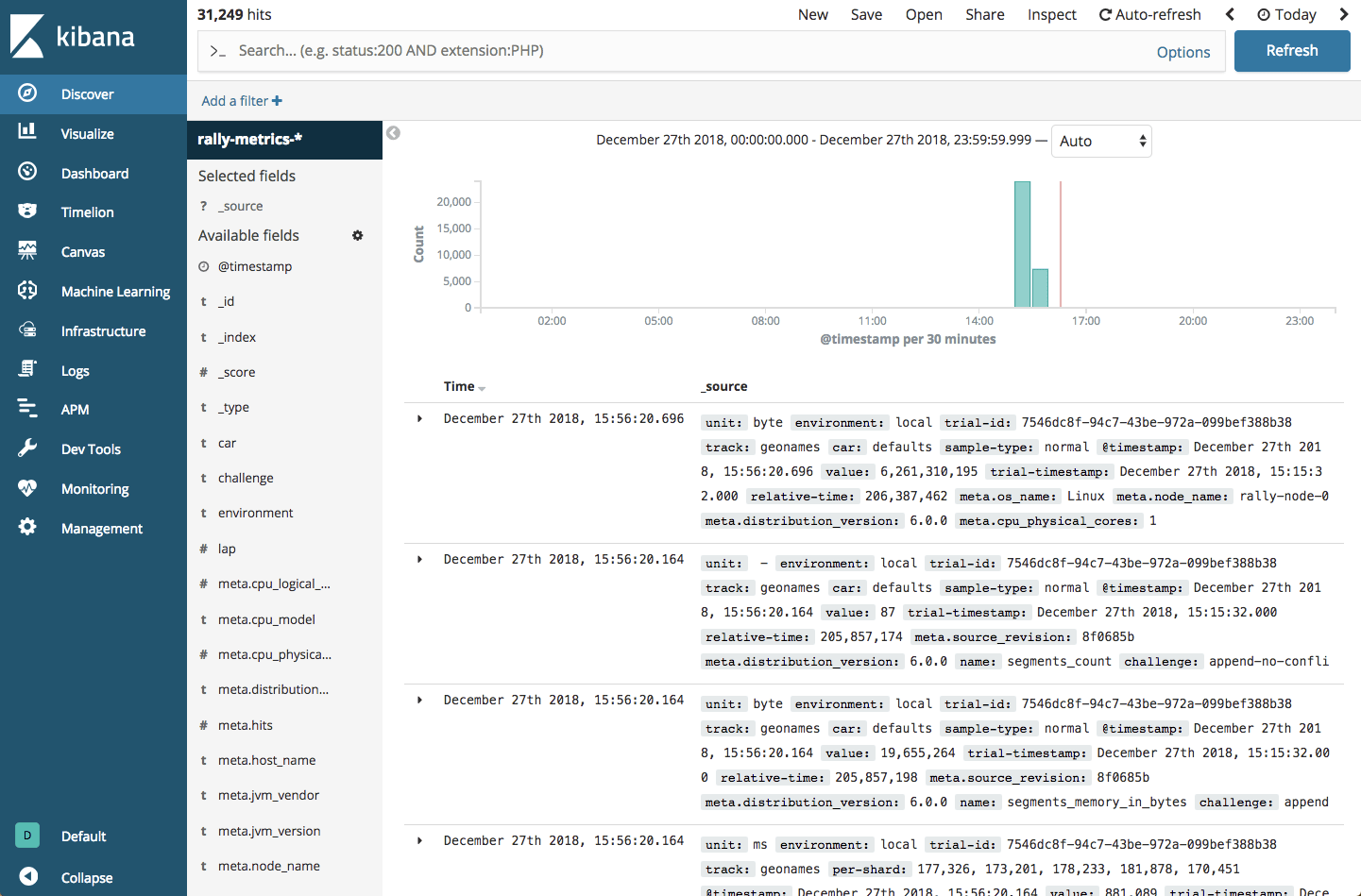

There is a wide array of solutions available on the market that support audit logging and centralized logging as a whole. ELK (Elasticsearch, Logstash, and Kibana) is one of the most commonly used open source solutions, while SIEM systems are more tailored to security use cases.

Using audit logging for security and compliance

Simply put, without audit logging, any action by a malicious actor on a system can go totally unnoticed.

Needless to say, this is a significant risk when trying to protect your environment or recover sensitive information for operations. Yes – audit logs are valuable for detecting and analyzing production issues, but they can also provide the underpinning for a security system.

Security compliance programs and certifications reflect industry best practices and focus on high-risk areas, so it is no coincidence that they include audit logging as a compliance requirement.

Below is a list of compliance programs with reference to audit logging components:

![chart]()

Audit logging best practices

The following are recommendations for system settings and configurations that can help you use audit logs for security and compliance.

Log system configuration

Logs are composed of event entries, which capture information related to a specific event that has occurred.

Log format will vary between sources, platforms, and applications, but each recorded event should capture at a minimum the following:

- General information:

  - Timestamp

  - Event, status, and/or error codes

  - Service/command/application name

  - User or system account associated with an event

  - Device used (e.g. source and destination IPs, terminal session ID, web browser, etc.)

- Operating system (OS) events:

  - Start-up and shut-down of the system

  - Start-up and shut-down of a service

  - Network connection changes or failures

  - Changes to, or attempts to change, system security settings and controls

  - Log-on attempts (successful or unsuccessful)

  - The function(s) performed after logging on (e.g., reading or updating a critical file, software installation)

  - Account changes (e.g., account creation and deletion, account privilege assignment)

  - Successful/failed use of privileged accounts

- Application account information:

  - Successful and failed application authentication attempts

  - Application account changes (e.g., account creation and deletion, account privilege assignment)

  - Use of application privileges

  - Application startup and shutdown

  - Application failures

  - Major application configuration changes

  - Application transactions, for example:

    - E-mail servers recording the sender, recipients, subject name, and attachment names for each e-mail

    - Web servers recording each URL requested and the type of response provided by the server

    - Business applications recording which financial records were accessed by each user
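One way to enforce the general information fields listed above is to define a single event type that refuses to be created when a field is missing. The following is a minimal sketch, assuming JSON as the output format. The `AuditEvent` class, its field names, and the sample values are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    """The minimum general information every recorded event should carry."""

    timestamp: str   # ISO 8601, ideally in UTC
    event_code: str  # event, status, or error code
    service: str     # service, command, or application name
    account: str     # user or system account associated with the event
    source: str      # device used, e.g. source IP or terminal session ID

    def __post_init__(self) -> None:
        # Reject events that leave any required field empty.
        missing = [name for name, value in asdict(self).items() if not value]
        if missing:
            raise ValueError(f"missing required fields: {', '.join(missing)}")

    def to_json(self) -> str:
        """Serialize the event as one JSON object with stable key order."""
        return json.dumps(asdict(self), sort_keys=True)


# Hypothetical event values.
event = AuditEvent(
    timestamp=datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc).isoformat(),
    event_code="LOGIN_FAILED",
    service="sshd",
    account="alice",
    source="192.0.2.10",
)
print(event.to_json())
```

OS-level and application-level events can then extend this base with their own fields while keeping the common ones searchable.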

Sync the timestamp

Unless logs use a common format and time reference for the timestamp field, correlating logs and analyzing events in sequence is almost impossible. A number of compliance standards require that clocks on all devices, servers, and applications be synchronized using NTP (Network Time Protocol). This configuration is typically applied globally within an enterprise, with a backup time source in case the primary fails. If you are using a log aggregator/processor such as Logstash, you can make sure a consistent timestamp is applied across all the audit logs as they are processed.
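The effect of mixed time zones on sequencing is easy to demonstrate. In the sketch below, two hypothetical sources report local times with different UTC offsets; converting both to UTC before sorting gives the true order. The `to_utc` helper and the sample timestamps are assumptions for illustration, and the helper deliberately rejects timestamps that carry no offset rather than guessing one.

```python
from datetime import datetime, timezone


def to_utc(raw: str, fmt: str) -> datetime:
    """Parse a timestamp that includes a UTC offset and convert it to UTC."""
    parsed = datetime.strptime(raw, fmt)
    if parsed.tzinfo is None:
        raise ValueError(f"no UTC offset in {raw!r}")
    return parsed.astimezone(timezone.utc)


# Hypothetical events: the firewall logs in UTC+1, the web server in UTC.
events = [
    ("firewall", to_utc("2024-01-15 10:31:00 +0100", "%Y-%m-%d %H:%M:%S %z")),
    ("web", to_utc("15/Jan/2024:09:30:30 +0000", "%d/%b/%Y:%H:%M:%S %z")),
]

# Sorting on the UTC value shows the web event actually happened first,
# even though its wall-clock reading looks later than 09:31 would suggest.
events.sort(key=lambda item: item[1])
for name, when in events:
    print(name, when.isoformat())
```

Synchronized clocks make the raw values trustworthy; converting to one reference zone makes them comparable.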

Log file security

Audit logs are also a prime target for attackers who are looking to cover evidence of their activities and to maximize opportunities to compromise data. To prevent malicious actors from hiding their activities, administrators must configure audit logging to enforce strong access control around audit logs and limit the number of user accounts that can modify audit log files.
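A quick way to review access control on log files is to inspect their permission bits. The sketch below, which assumes a POSIX system, reports log files that are writable by the group or by everyone, or readable by everyone. The `permission_problems` helper is hypothetical, and the demonstration uses a temporary file rather than a real log.

```python
import os
import stat
import tempfile
from typing import List


def permission_problems(path: str) -> List[str]:
    """List permission bits that are too loose for an audit log file."""
    mode = os.stat(path).st_mode
    problems = []
    if mode & stat.S_IWGRP:
        problems.append("group-writable")
    if mode & stat.S_IWOTH:
        problems.append("world-writable")
    if mode & stat.S_IROTH:
        problems.append("world-readable")
    return problems


# Demonstrate on a temporary file standing in for a log file.
with tempfile.NamedTemporaryFile(delete=False) as handle:
    demo_path = handle.name

os.chmod(demo_path, 0o666)  # deliberately too permissive
loose = permission_problems(demo_path)

os.chmod(demo_path, 0o600)  # owner read/write only
tight = permission_problems(demo_path)

os.remove(demo_path)
print(loose, tight)
```

Permission bits are only one layer. Ownership, ACLs, and who holds administrative access on the host matter just as much.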

Finally, if audit logs are transmitted for remote collection or for archive/backup, administrators should ensure that the transmission is secure, with encryption in transit and encryption of the backups. This will improve the chances that the logs will be usable, if necessary, for forensic analysis of events.
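As a rough illustration of encryption in transit, the sketch below sends log lines to a collector over TLS using Python's standard `ssl` module. It assumes a collector that accepts newline-delimited text on port 6514 (the port registered for syslog over TLS). The host name in the usage note, the helper names, and the newline framing are assumptions, and a real syslog-over-TLS receiver may expect different framing.

```python
import socket
import ssl
from typing import Iterable, Optional


def make_tls_context() -> ssl.SSLContext:
    """Build a client context that verifies the server and refuses old TLS versions."""
    context = ssl.create_default_context()  # certificate and hostname checks enabled
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def send_log_lines(
    lines: Iterable[str],
    host: str,
    port: int = 6514,
    context: Optional[ssl.SSLContext] = None,
) -> None:
    """Send each log line to the collector over an encrypted connection."""
    context = context or make_tls_context()
    with socket.create_connection((host, port), timeout=10) as raw_socket:
        with context.wrap_socket(raw_socket, server_hostname=host) as tls_socket:
            for line in lines:
                # Newline-delimited framing is an assumption of this sketch.
                tls_socket.sendall(line.encode("utf-8") + b"\n")


tls_context = make_tls_context()
print(tls_context.minimum_version, tls_context.verify_mode)
```

A call such as `send_log_lines(["user=alice action=login"], "logs.example.internal")` would then transmit the line, where `logs.example.internal` is a placeholder for your own collector. Most log shippers provide equivalent TLS settings, so in practice this is usually a configuration option rather than custom code.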

Endnotes

Audit logging requires an understanding of the architecture of your system and the different components that make it up. Understanding how the different building blocks communicate with each other and how they rely on each other is part of understanding how to fine-tune and protect your system. With the advent of cloud computing, virtualized resources, and devices, modern systems rely on audit logging tools to address a new complexity of audit events.

Security and compliance requirements for audit logs add configuration and operational considerations, such as protecting the log data to preserve the integrity, availability, and confidentiality of records. A log management platform such as Logz.io provides a centralized solution for log aggregation, processing, storage, and analysis to help organizations improve audit log management.
