A short guide to Kibana searches.

Demystifying Kibana Searches

Infrastructure Monitoring with Topbeat and the ELK Stack

In a previous post, we described how to use Packetbeat to analyze networks by monitoring metrics on web, database, and other network protocols. Another member of Elastic’s “Beats” family is Topbeat — a shipper that monitors system data and processes.

Topbeat collects data on CPU usage, memory, process statistics, and other system-related metrics that when shipped into the ELK Stack for indexing and analysis, can be used for real-time monitoring of your infrastructure.

In this post, we will describe how to monitor a basic infrastructure setup that consists of a single server (in this case, deployed on AWS) using Topbeat and the Logz.io ELK Stack. We will begin by configuring the pipeline from Topbeat into the ELK Stack and then show how to analyze and visualize the data.

Setting Up Topbeat

Our first step is to install and configure Topbeat (the full installation instructions are here):

$ curl -L -O https://download.elastic.co/beats/topbeat/topbeat_1.2.3_amd64.deb $ sudo dpkg -i topbeat_1.2.3_amd64.deb

Open the configuration file at /etc/topbeat/topbeat.yml:

$ sudo vim /etc/topbeat/topbeat.yml

The first section if the configuration file allows you to define how often statistics are read from your system and the specific processes to monitor. In our case, the default settings will do just fine.

Moving on, you need to define to where the data will be outputted. By default, Topbeat is configured to output the data to Elasticsearch. If you’re using a locally-installed Elasticsearch instance, this default configuration will suit you just fine:

### Elasticsearch as output elasticsearch: hosts: ["localhost:9200"]

Or, you could ship to Logstash using the default configuration in the ‘Logstash as output’ section. You will need to uncomment the relevant lines.

In our case, though, we’re going to comment out the Elasticsearch output configuration and define a file output configuration. In the File as output section, uncomment the default settings as follows:

### File as output file: path: "/tmp/topbeat" filename: topbeat rotate_every_kb: 10000 number_of_files: 7

Next, in the Logging section, define a log file size limit that, once reached, will trigger an automatic rotation:

logging: files: rotateeverybytes: 10485760

Once done, start Topbeat:

$ sudo /etc/init.d/topbeat start

Setting Up Filebeat

As shown above, Topbeat data can be sent directly to Elasticsearch or forwarded via Logstash. Since we do not yet have a native log shipper for Topbeat, we’re going to use Filebeat to input the file exported by Topbeat into the Logz.io ELK setup (if you’re using the open source ELK Stack, you can skip this step).

First, download and install the Public Signing Key:

$ curl https://packages.elasticsearch.org/GPG-KEY-elasticsearch | sudo apt-key add -

Then, save the repository definition to /etc/apt/sources.list.d/beats.list:

$ echo "deb https://packages.elastic.co/beats/apt stable main" | sudo tee -a /etc/apt/sources.list.d/beats.list

Now, update the system and install Filebeat:

$ sudo apt-get update && sudo apt-get install filebeat

The next step is to download a certificate and move it to the correct location, so first run:

$ wget http://raw.githubusercontent.com/cloudflare/cfssl_trust/master/intermediate_ca/COMODORSADomainValidationSecureServerCA.crt

And then:

$ sudo mkdir -p /etc/pki/tls/certs $ sudo cp COMODORSADomainValidationSecureServerCA.crt /etc/pki/tls/certs/

We now need to configure Filebeat to ship our Topbeat file into Logz.io.

Open the Filebeat configuration file:

$ sudo vim /etc/filebeat/filebeat.yml

Defining the Filebeat Prospector

Prospectors are where we define the files that we want to tail. You can tail JSON files and simple text files. In our case, we’re going to define the path to our Topbeat JSON file.

Please note that when harvesting JSON files, you need to add ‘logzio_codec: json’ to the fields object. Also, the fields_under_root property must be set to ‘true.’ Be sure to enter your Logz.io token in the necessary namespace:

prospectors:

paths:

– /tmp/topbeat/*

fields:

logzio_codec: json

token: UfKqCazQjUYnBN***********************

fields_under_root: true

ignore_older: 24h

A complete list of known types is available here, and if your type is not listed there, please let us know.

Defining the Filebeat Output

Outputs are responsible for sending the data in JSON format to Logstash. In the configuration below, the Logz.io Logstash host is already defined along with the location of the certificate that you downloaded earlier and the log rotation setting:

output: logstash: # The Logstash hosts hosts: ["listener.logz.io:5015"] tls: # List of root certificates for HTTPS server verifications Certificate_authorities: ['/etc/pki/tls/certs/COMODORSADomainValidationSecureServerCA.crt'] logging: # To enable logging to files, to_files option has to be set to true files: # Configure log file size limit. rotateeverybytes: 10485760 # = 10MB

Be sure to put your Logz.io token in the required fields.

Once done, start Filebeat:

$ sudo service filebeat start

Analyzing the Data

Important note! If you’re using the open source ELK Stack, another step is necessary — loading the Topbeat index template in Elasticsearch. Since Logz.io uses dynamic mapping, this step is not necessary in our case. Please refer to Elastic’s documentation for more information.

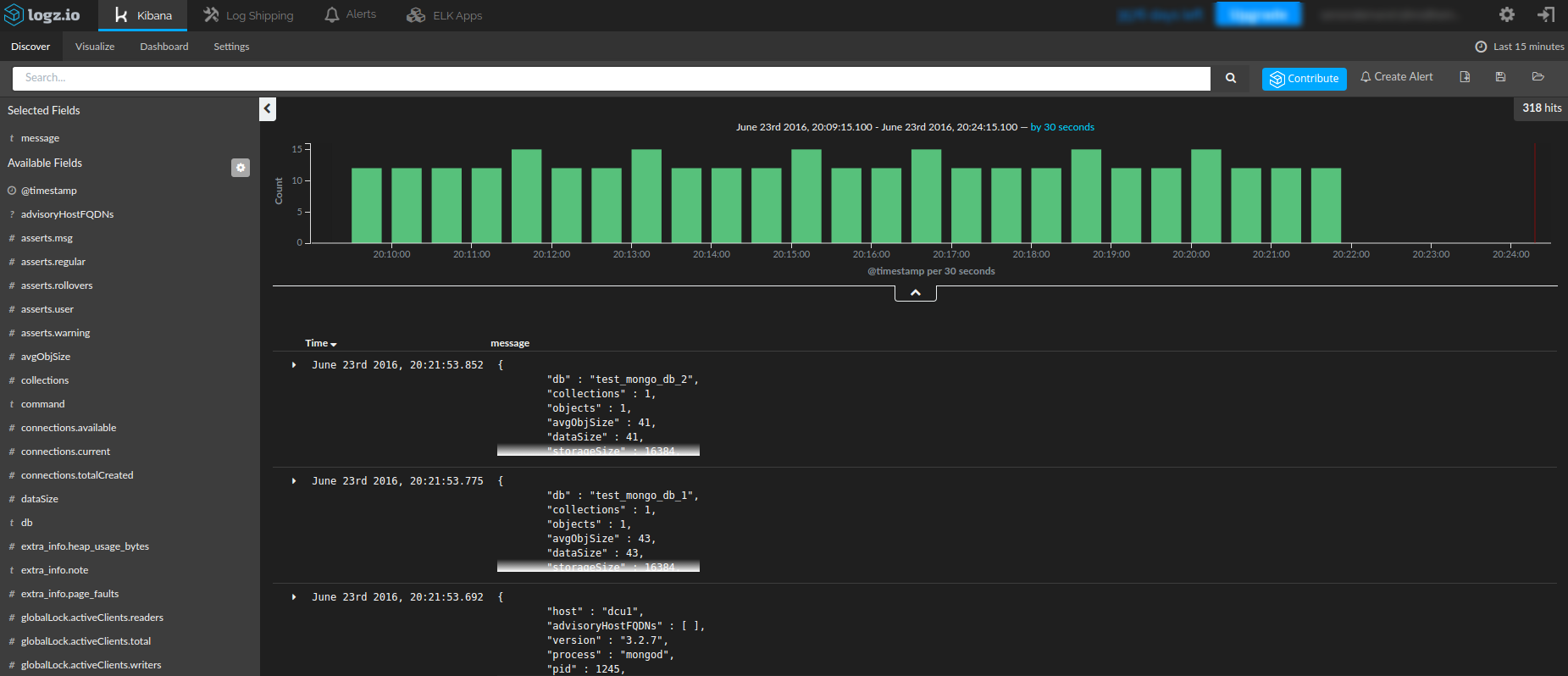

To verify that the pipeline is up and running, access the Logz.io user interface and open the Kibana tab. After a minute or two, you should see a stream of events coming into the system.

You may be shipping other types of logs into Logz.io, so the best way to filter out the other logs is by first opening one of the messages coming in from Topbeat and filtering via the ‘source’ field.

The messages list is then filtered to show only the data outputted by Topbeat:

Start by adding some fields to the messages list. Useful fields are the ‘type’ and ‘host’ fields, especially when monitoring a multi-node environment. This will give you a slightly clearer picture of the messages coming in from Topbeat.

Next, query Elasticsearch. For example, if you’d like to focus on system data, use a field-level search to pinpoint these specific messages:

type:system

Source Types

Our next step is to visualize the data. To do this, we’re going to save the search and then select the Visualize tab in Kibana.

For starters, let’s begin with a simple pie chart that gives us a breakdown of the different source types coming into Elasticsearch from Topbeat. The configuration of this visualization looks like this:

Hit the Play button to preview the visualization:

Memory Usage Over Time

Now, let’s try to create a more advanced visualization — a new line chart that shows memory usage over time. To do this, we’re going to use the saved search for system-type messages (shown above) as the basis for the visualization.

The Y axis in this case will aggregate the average value for the ‘mem.actual_used’ field, and the X axis will aggregate by the ‘@timestamp’ field. We can also add a sub-aggregation to show data for other hosts (in this case, only one host will be displayed).

The configuration of this visualization looks like this:

And the end-result:

Per-Process Memory Consumption

Another example of a visualization that we can create is an area chart comparing the memory consumption for specific processes on our server.

The configuration of this visualization will cross-reference the average values for the ‘proc.mem.rss_p’ field (the Y axis) with a date historgram and the ‘proc.name’ field (X axis).

The configuration looks like this:

And the end-result:

Topbeat Dashboard

After saving the visualizations, it’s time to create your own personalized dashboard. To do this, select the Dashboard tab, and use the + icon in the top-right corner to add your two visualizations.

Now, If you’re using Logz.io, you can use a ready-made dashboard that will save you the time spent on creating your own set of visualizations.

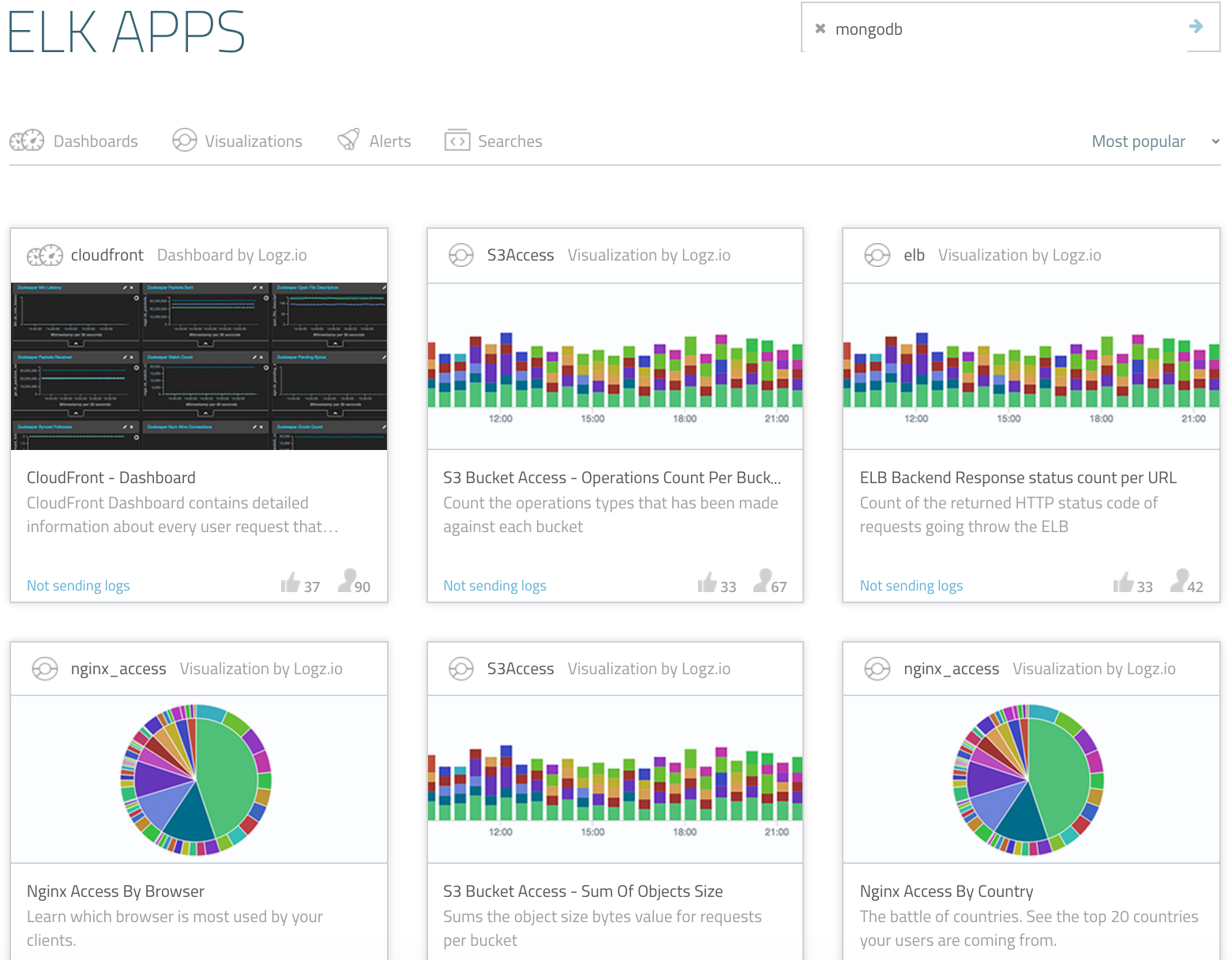

Select the ELK Apps tab:

ELK Apps are free and pre-made visualizations, searches and dashboards customized for specific log types. (You can see the library directly or learn more about them.) Enter ‘Topbeat’ in the search field:

Install the Topbeat dashboard, and then open it in Kibana:

So, in just a few minutes, you can set up a monitoring system for your infrastructure with metrics on CPU, memory, and disk usage as well as per-process stats. Pretty nifty, right?

Logz.io is a predictive, cloud-based log management platform that is built on top of the open-source ELK Stack. Start your free trial today!

Troubleshooting 5 Common ELK Glitches

Getting started with the ELK Stack is straightforward enough and usually includes just a few commands to get all three services up and running. But — and this is big “but” — there are some common issues that can cause users some anguish.

The first piece of good news is that these issues are usually easy to resolve. The other piece of good news is that we’ve put together the top five most-common issues and explained how to troubleshoot them.

#1. Kibana is Unable to Connect to Elasticsearch

You’ve installed Elasticsearch, Logstash, and Kibana. You open the latter in your browser and get the following screen:

All is not lost! This is a pretty common issue, and it can be easily resolved.

As the error message implies, Kibana cannot properly establish a connection with Elasticsearch. The reasons for this vary, but it is usually a matter of defining the Elasticsearch instance correctly in the Kibana configuration file.

Open the file at /opt/kibana/config/kibana.yml and verify that the server IP and host for ‘elasticsearch_url’ are configured correctly (both the URL and port):

Here is an example for a locally-installed Elasticsearch instance:

elasticsearch_url: "http://localhost:9200"

Restart Kibana:

sudo service kibana restart

That should do it. If the problem persists, there may be an issue with Elasticsearch. Check out the Elasticsearch troubleshooting sections below.

#2. Kibana is Unable to Fetch Mapping

In this case, Kibana has established a connection with Elasticsearch but cannot fetch mapping for an index:

As the message displayed on the grey button at the bottom of the page indicates, Kibana cannot find any indices stored in Elasticsearch that match the default logstash-* pattern — the default pattern for data being fed into the system by Logstash (which is the method Kibana assumes you are using).

If you’re not using Logstash to forward the data into Elasticsearch or if you’re using a non-standard pattern in your Logstash configuration, enter the index pattern that matches the name of one or more of your Elasticsearch indices. If Kibana finds the index pattern, the grey button will turn into a pretty green one, allowing you to define the index into Kibana.

If you are using the conventional Logstash configuration to ship data, then there is most likely a communication issue. In other words, your logs aren’t making it into Elasticsearch. For some reason, either Logstash or Elasticsearch may not be running. See the sections below for more details on how to make sure that these services are running properly.

#3. Logstash is Not Running

Logstash can be a tricky component to manage and work with. We’ve previously covered a number of pitfalls you should look out for, but there are a number of reasons that Logstash still may not be running even after taking care to avoid these landmines.

A common issue causing Logstash to fail is a bad configuration. Logstash configuration files, which are located in the /etc/logstash/conf.d directory, follow strict syntax rules that, if broken, will cause a Logstash error. The best way to validate your configurations is to use the configtest parameter in the service command:

$ sudo service logstash configtest

If there’s a configuration error, it’ll show up in the output. Fix the syntax and try to run Logstash again:

$ sudo service logstash restart

Check the status of the service with:

$ sudo service logstash status

If Logstash is still not running after you fix the issue, take a look at the Logstash logs at: /var/log/logstash/logstash.log.

Read the log message and try to fix the issue as reported in the log. Here’s an example of a log message warning us of a deprecated host configuration:

{:timestamp=>"2016-05-30T08:10:42.303000+0000", :message=>"Error: The setting `host` in plugin `elasticsearch` is obsolete and is no longer available. Please use the 'hosts' setting instead. You can specify multiple entries separated by comma in 'host:port' format. If you have any questions about this, you are invited to visit https://discuss.elastic.co/c/logstash and ask.", :level=>:error}As the message itself points out, use the Elastic forums to search for an answer to the particular issue you’ve encountered and as reported in the log.

#4 Logstash is Not Shipping Data

You’ve got Logstash purring like a cat, but there is no data being shipped into Elasticsearch.

The prime suspect in this case is Elasticsearch, which may not be running for some reason or other. You can verify this by running the following cURL:

$ curl 'http://localhost:9200'

You should see the following output in your terminal:

{

"name" : "Jebediah Guthrie",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.3.1",

"build_hash" : "bd980929010aef404e7cb0843e61d0665269fc39",

"build_timestamp" : "2016-04-04T12:25:05Z",

"build_snapshot" : false,

"lucene_version" : "5.5.0"

},

"tagline" : "You Know, for Search"

}If Elasticsearch is still not shipping data, skip over to the Elasticsearch troubleshooting section below for more reasons why Elasticsearch might not be running properly.

Another common issue that may be causing this error is a bad output configuration in the Logstash configuration file. Open the configuration file at: /etc/logstash/conf.d/xxx.conf and verify that the Elasticsearch host is configured correctly:

output {

elasticsearch {}

}Restart Logstash:

$ sudo service logstash restart

#5 Elasticsearch is Not Running

How do you know Elasticsearch is not running? There are a number of indicators, and the most obvious one is that no no logs are appearing in Kibana. As specified above, the most reliable way to ping the Elasticsearch service is by cURLing it:

$ curl 'http://localhost:9200'

If all is well, you should see the following output in your terminal:

{

"name" : "Jebediah Guthrie",

"cluster_name" : "elasticsearch",

"version" : {

"number" : "2.3.1",

"build_hash" : "bd980929010aef404e7cb0843e61d0665269fc39",

"build_timestamp" : "2016-04-04T12:25:05Z",

"build_snapshot" : false,

"lucene_version" : "5.5.0"

},

"tagline" : "You Know, for Search"

}If not, the output will look like this:

curl: (7) Failed to connect to localhost port 9200: Connection refused

Now, there are a number of possible reasons Elasticsearch is not running.

First, if you just installed Elasticsearch, you need to manually start the service because it is not started automatically upon installation:

$ sudo service elasticsearch start * elasticsearch is running

If you still get a message that Elasticsearch is not running, you will have to dig in deeper. As with Logstash, the best place to try and debug the service is the log file: /var/log/elasticsearch/elasticsearch.log.

A common cause for a failing Elasticsearch is a bad host definition in the configuration file. Live tailing of the log file while starting the service is a good method for identifying a specific error. Here is an example:

2016-05-30 07:40:36,799][ERROR][bootstrap] [Condor] Exception BindTransportException[Failed to bind to [9300-9400]]; nested: ChannelException[Failed to bind to: /192.0.0.1:9400]; nested: BindException[Cannot assign requested address];

The host configuration is located in the Network section of the Elasticsearch configuration file, and it should look like this:

//When Kibana and Elasticsearch are hosted on the same machine network.host: localhost http.port: 9200 //When Kibana and Elasticsearch are hosted on different machines network.bind_host: 0.0.0.0 http.port: 9200 network.publish_host: <ServerIP>

Verify the configuration, and restart the service:

$ sudo service elasticsearch restart

If the issue is not the host definition, the log will give you an indication as to the cause of the error and will help you resolve it. Search the Elastic forums — the chances are that someone else has encountered the issue before.

And one last tip (on Ubuntu only): If you had Elasticsearch working properly and it suddenly it stopped, this might be due to a restart of your server as Elasticsearch is not configured to start on boot. To change this, you can use:

$ sudo update-rc.d elasticsearch defaults 95 10

A Final Note

Here at Logz.io, we’ve had a lot of experience with troubleshooting the various quirks in the ELK Stack. This article covered some common and basic setup issues that newcomers to the system might encounter. More advanced tips can be found in these Elasticsearch and Logstash cheatsheets.

Happy indexing!

Logz.io is a predictive, cloud-based log management platform that is built on top of the open-source ELK Stack. Start your free trial today!

7 DockerCon Speakers Pick the Top Sessions You Shouldn’t Miss

The speed in which Docker and container technology have become an integral part of application development and deployment is remarkable. While Docker is young (it recently celebrated it’s third birthday), and many still question its suitability for production and the enterprise, its high adoption rate, countless large production use cases, and soaring popularity all point to a bright future.

These trends will culminate next week at DockerCon 2016 as thousands will flock to Seattle to hear about the latest Docker developments and learn from users about their Docker stories.

With so many great sessions and activities taking place, it’s will be tough to select where it will be best to invest your time. So, we asked some of our favorite DockerCon speakers to tell us what session they’re looking forward to most of all (and be sure to check out the sessions they are giving as well, we selected them for a reason!). Click on the links in their names to follow their thoughts on Twitter!

![robert mcfrazier]() #1 Robert McFrazier, Solutions Engineer at Google

#1 Robert McFrazier, Solutions Engineer at Google

Robert’s session at DockerCon is: Making it Easier to Contribute to Open Source Projects using Docker Containers

His pick is:

Docker for Mac and Windows

Docker for Mac and Windows were released in beta in March, and provide lots of new features that users have been clamouring for including: file system notifications, simpler file sharing, and no Virtualbox hassles.

During this talk, I will give the inside guide to how these products work. We will look at all the major components and how they fit together to make up the product. This includes a technical deep dive covering the hypervisors for OSX and Windows, the custom file sharing code, the networking, the embedded Alpine Linux distribution, and more.

Speaker: Justin Cormack, Engineer, Docker

Monday June 20, 2016 2:55pm – 3:40pm

Ballroom 6C

Why Robert recommends this session:

“Mac and Windows are probably the two most popular environments for Docker containers development. Looking forward to hearing how Docker works and finding new tips/tricks for Docker on these operating systems.”

![laura frank]() #2 Laura Frank, Software Engineer at Codeship

#2 Laura Frank, Software Engineer at Codeship

Laura will be giving two talks at DockerCon: Curated Birds of the Feather and Efficient Parallel Testing with Docker.

Her first pick for DockerCon is:

Containerd: Building a Container Supervisor

Containerd is a container supervisor that allows users to manage the lifecycle of a container as well as interact with the container while it is executing. Containerd was built to fulfill many of the requirements that we expect from a modern supervisor all while staying small and fast. In this talk, we will discuss some of the design decisions that shaped containerd’s architecture that allows it to reattach to running containers if it was killed and how it is designed to start 100s containers in seconds.

Speaker: Michael Crosby, Engineer, Docker

Monday June 20, 2016 4:25pm – 5:10pm

Ballroom 6C

Why Laura recommends this session:

“If you want to get further into the guts of containerization and see the direction that Docker is heading in, you can’t miss this talk. I’ll be in the front row, holding up a giant “I’m a Contai-Nerd” sign.”

Her second pick is:

Docker for Mac and Windows

Docker for Mac and Windows were released in beta in March, and provide lots of new features that users have been clamouring for including: file system notifications, simpler file sharing, and no Virtualbox hassles.

During this talk, I will give the inside guide to how these products work. We will look at all the major components and how they fit together to make up the product. This includes a technical deep dive covering the hypervisors for OSX and Windows, the custom file sharing code, the networking, the embedded Alpine Linux distribution, and more.

Speaker: Justin Cormack, Engineer, Docker

Monday June 20, 2016 2:55pm – 3:40pm

Ballroom 6C

Why Laura recommends this session:

“Running Docker on non-Linux systems has been getting easier over the last year with the introduction of Docker Toolbox. But sometimes, the performance of VirtualBox stood in the way of productivity. Docker for Mac and Windows promises to ease a lot of the pain points associated with Docker Toolbox and VirtualBox, and I’m super jazzed to hear all the nerdy technical details. I also majorly love Alpine Linux, so I’m glad it’s getting a lot of attention from Docker.”

Her third pick:

The Dockerfile Explosion and the Need for Higher Level Tools

Dockerfiles are great. They provide a zero-barrier-to-entry format for describing a single Docker image which is immediately clear to anyone reading them. But with that simplicity comes problems that become apparent as your adoption of Docker gathers pace.

- Dockerfiles can inherit from other docker images, but images are not Dockerfiles

- Dockerfile provides no built-in mechanism for creating abstractions, so as usage grows identical or similar instructions can be duplicated across many files

- The Docker APi exposes a build endpoint, but the API is very course, taking Dockerfile as the transport rather than exposing the individual instructions

- Dockerfiles are just that, files. So they can come from anywhere

The one layer per line in a Dockerfile limitation can lead to an explosion of layers, which fail to take advantage of the promised space and performance benefits.

Speaker: Gareth Rushgrove, Senior Software Engineer, Puppet Labs

Monday June 20, 2016 2:55pm – 3:40pm

Ballroom 6A

Why Laura recommends this session:

“Optimizing Dockerfiles is a casual hobby mine, but I realize most people don’t share that passion. Learning how to write your Dockerfiles to take advantage of caching, determining whether to use ADD or COPY, and discovering other ways to trim down the size of your Docker images may seem like an insurmountable task, but this talk promises lots of practical and actionable advice.”

![andrey sibiryov]() #3 Andrey Sibiryov, Sr. Infrastructure Engineer at Uber

#3 Andrey Sibiryov, Sr. Infrastructure Engineer at Uber

Andrey’s session at DockerCon is: Sharding Containers: Make Go Apps Computer-Friendly Again

His first pick is:

Microservices + Events + Docker = A Perfect Trio

Microservices are an essential enabler of agility but developing and deploying them is a challenge. In order for microservices to be loosely coupled, each service must have its own datastore. This makes it difficult to maintain data consistency across services.

Deploying microservices is also a complex problem since an application typically consists of 10s or 100s of services, written in a variety of languages and frameworks.

In this presentation, you will learn how to solve these problems by using an event-driven architecture to maintain data consistency and by using Docker to simplify deployment.

Speaker: Chris Richardson, Founder, Eventuate

Monday June 20, 2016 2:00pm – 2:45pm

Ballroom 6A

Why Andrey recommends this session:

“I think that event-sourced systems can be one of the next big things in the SoA & microservices world so I want to attend this one to see the overall direction.”

His second pick is:

The Golden Ticket: Docker and High-Security Microservices

True microservices are more than simply bolting a REST interface on your legacy application, packing it in a Docker container and hoping for the best. Security is a key component when designing and building out any new architecture, and it must be considered from top to bottom. Umpa Lumpas might not be considered “real” microservices, but Willy Wonka still has them locked down tight!

In this talk, Aaron will briefly touch on the idea and security benefits of microservices before diving into practical and real world examples of creating a secure microservices architecture. We’ll start with designing and building high security Docker containers, using and examining the latest security features in Docker (such as User Namespaces and seccomp-bpf) as well as examine some typically forgotten security principals. Aaron will end on exploring related challenges and solutions in the areas of network security, secrets management and application hardening. Finally, while this talk is geared towards Microservices, it should prove informational for all Docker users, building a PaaS or otherwise.

Speaker: Aaron Grattafiori, Technical Director, NCC Group

Monday June 20, 2016 5:20pm – 6:05pm

Ballroom 6C

Why Andrey recommends this session:

“There were many security-related innovations around Docker in the past year and I missed it all – this is a great opportunity to catch up.”

His third pick is:

Unikernels and Docker: From Revolution to Evolution

Unikernels are a growing technology that augment existing virtual machine and container deployments with compact, single-purpose appliances. Two main flavors exist: clean-slate unikernels, which are often language specific, such as MirageOS (OCaml) and HaLVM (Haskell), and more evolutionary unikernels that leverage existing OS technology recreated in library form, notably Rump Kernel used to build Rumprun unikernels.

To date, these have been something of a specialist’s game: promising technology that requires considerable effort and expertise to actually deploy. After a brief introduction for newcomers to unikernels, Mindy will demonstrate the great strides that have been taken recently to integrate unikernels with existing deployments. Specifically, we will show various ways in which Rumprun and MirageOS unikernels can be used to deploy a LAMP stack, all managed using the popular Docker toolchain (Docker build, Docker run, and the Docker Hub). The result is unikernels that can be used to augment and evolve existing Linux container- and VM-based deployments, one microservice at a time. We no longer need a revolution—welcome to the microservice evolution!

Speaker: Mindy Preston, Software Engineer, Docker

Tuesday June 21, 2016 2:25pm – 3:10pm

Ballroom 6C

Why Andrey recommends this session:

“Unikernels are probably still the most controversial and at the same time super-exciting topic at the bleeding edge of modern infrastructure. Definitely worth attending.”

![anna ossowski]() #4 Anna Ossowski, Community Manager at Eldarion

#4 Anna Ossowski, Community Manager at Eldarion

Anna will be giving this session at DockerCon: Be(com) a Mentor! Help Others Succeed and will participate in this panel: Open Source and Burnout – How Can We as a Community Help?

Anna’s first pick for DockerCon is:

Making it Easier to Contribute to Open Source Projects using Docker Containers

Making it easy to contribute to open source project using Docker containers, by lowering the system admin required to get started. Also making it easy “try” out new technology.

Speaker: Robert McFrazier, Solution Engineer, Google

Monday June 20, 2016 4:25pm – 5:10pm

Room 609

Why Anna recommends this session:

“I’m an advocate for new open source contributors and I look forward to hearing Robert’s ideas on how to break down some of the contribution barriers that old and new open source contributors have to face sometimes and how we can make it easier for them to contribute to open source using Docker containers.”

Her second pick is:

Open Source is Good for Both Business and Humanity

Sharing and collaborating on source code started decades ago but has increased remarkably the past twenty years. We’ve seen a large increase in better software, better solutions and better code.

Learn why that happened, and how organizations, companies and humanity is benefiting from Open Source.

Speaker: Jonas Rosland, Developer Advocate, EMC {code}

Tuesday June 21, 2016 11:15am – 12:00pm

Room 609

Why Anna recommends this session:

“I look forward to learning about the history and progress of open source and open source collaboration, learning about the reasons for this process, and finding out more about how companies benefit from open source from Jonas’s perspective.”

Her third pick is:

Making Friendly Microservices

Small is the new big, and for good reason. The benefits of microservices and service-oriented architecture have been extolled for a number of years, yet many forge ahead without thinking of the impact the users of the services. Consuming on micro services can be enjoyable as long as the developer experience has been crafted as finely as the service itself. But just like with any other product, there isn’t a single kind of consumer. Together we will walk through some typical kinds of consumers, what their needs are, and how we can create a great developer experience using brains and tools like Docker.

Speaker: Michele Titolo, Lead Software Engineer, Capital One

Tuesday June 21, 2016 1:30pm – 2:15pm

Ballroom 6A

Why Anna recommends this session:

“I don’t know much about microservices yet and would love to learn more about them. I also look forward to learning about different kinds of consumers from Michele and finding out more about her approach of making the process of developing microservices friendly, both for the consumer and the developer.”

![chris richardson]() #6 Chris Richardson, Founder at Eventuate.io

#6 Chris Richardson, Founder at Eventuate.io

Chris’ session at DockerCon is: Microservices + Events + Docker = A Perfect Trio.

His pick:

Thinking Inside the Container: A Continuous Delivery Story

Riot builds a lot of software. At the start of 2015 we were looking at 3000 build jobs over a hundred different applications and dozens of teams. We were handling nearly 750 jobs per hour and our build infrastructure needed to grow rapidly to meet demand. We needed to give teams total control of the “stack” used to build their applications and we needed a solution that enabled agile delivery to our players. On top of that, we needed a scalable system that would allow a team of four engineers to support over 250.

After as few explorations, we built an integrated Docker solution using Jenkins that accepts docker images submitted as build environments by engineers around the company . Our “containerized” farm now creates over 10,000 containers a week and handles nearly 1000 jobs at a rate of about 100 jobs an hour.

In this occasionally technical talk, we’ll explore the decisions that led Riot to consider Docker, the evolutionary stages of our build infrastructure, and how the open source and in-house software we combined to achieve our goals at scale. You’ll come away with some best practices, plenty of lessons learned, and insight into some of the more unique aspects of our system (like automated testing of submitted build environments, or testing node.js apps in containers with Chromium and xvfb).

Speaker: Maxfield Stewart, Engineering Manager, Riot Games

Monday June 20, 2016 2:55pm – 3:40pm

Ballroom 6B

![michele titolo]() #7 Michelle Titolo, Lead Software Engineer at Capital One

#7 Michelle Titolo, Lead Software Engineer at Capital One

Michelle’s session at DockerCon is: Making Friendly Microservices

Her first pick:

The Golden Ticket: Docker and High Security Microservices

True microservices are more than simply bolting a REST interface on your legacy application, packing it in a Docker container and hoping for the best. Security is a key component when designing and building out any new architecture, and it must be considered from top to bottom. Umpa Lumpas might not be considered “real” microservices, but Willy Wonka still has them locked down tight!

In this talk, Aaron will briefly touch on the idea and security benefits of microservices before diving into practical and real world examples of creating a secure microservices architecture. We’ll start with designing and building high security Docker containers, using and examining the latest security features in Docker (such as User Namespaces and seccomp-bpf) as well as examine some typically forgotten security principals. Aaron will end on exploring related challenges and solutions in the areas of network security, secrets management and application hardening. Finally, while this talk is geared towards Microservices, it should prove informational for all Docker users, building a PaaS or otherwise.

Speaker: Aaron Grattafiori, Technical Director, NCC Group

Monday June 20, 2016 5:20pm – 6:05pm

Ballroom 6C

Why Michelle recommends this session:

“I’m interested in learning more related to secure containers and microservices.”

Her second pick:

Be(come) a Mentor! Help Others Succeed

There is always something new to learn in technology. We are always experts in one and beginners in another field. In order to learn successfully it’s important to have a mentor but it’s equally important to learn how to be a good mentor. In my talk we’ll explore what a mentor is, why we should all be(come) mentors, tips and tricks of mentorship, and concrete ways you can get involved as a mentor.

There will be some Star Wars, too!

Speaker: Anna Ossowski, Community Manager, Eldarion

Monday June 20, 2016 5:20pm – 6:05pm

Room 609

Why Michelle recommends this session:

“I love hearing about other people’s strategies for mentoring, since it’s so imperative to being an effective leader and engineer.”

If you’re attending DockerCon, be sure to drop by Booth E5 to get a demo on how Logz.io provides the ELK Stack as an end-to-end enterprise-grade service!

5 Docker Monitoring Solutions on Show at DockerCon 2016

SEATTLE, Washington — Docker is being used in more and more production deployments. As such, the ecosystem surrounding Docker is picking up the gauntlet by creating more and more solutions for monitoring — which is crucial for keeping tabs on a Dockerized environment and gaining visibility into the state and health of containers.

With so many platforms available, it’s tough to tell the difference. Nothing exemplifies this than DockerCon 2016. While walking through the Expo Hall yesterday, I counted at least ten companies professing to do Docker monitoring on some level or another. I decided to see how five of these companies differentiated themselves from their competitors by interviewing their representatives.

Here is what I found.

Dynatrace

Dynatrace offers a powerful solution for Docker monitoring by providing users with high-level metrics that are crucial from a business perspective together with extremely detailed insights on containerized services.

Docker users will see information that is specific to images and containers such as the numbers of images being used, running containers, and per-microservice metrics. Dynatrace’s Smartscape feature shows a map of what component in your stack relies on what — helping you to understand the relationships between all the different layers (including your Docker containers themselves).

I spoke with Pawel Brzoska, a member of Dynatrace’s product management team, who pointed out that as opposed to other Docker monitoring tools, his company focuses on metrics that are generated on the client-side as well: “Our focus is on measuring user-experience as well, so we explore whether the actual users of the application are happy by measuring metrics on the client-side such as page load.

Datadog

Datadog is a cloud-based solution that monitors the infrastructure behind your applications, tracking metrics and events that shipped via agents, APIs, or even third-party tools (including some of the other monitoring tools listed here) from databases, configuration management tools, cloud services, and, yes, Docker. Good guess.

For Docker environments, Datadog supplies an agent that can be either installed or run as a container on your host. The agent connects via the Docker socket and aggregates docker-stats data, which can be analyzed using a dedicated dashboard and sliced and diced per container.

At DockerCon, a new feature called “Service Discovery” was announced that enables Datadog to identify the type of containers running on the host and the ports being assigned to it automatically.

Datadog’s strengths are both the ability to combine data from various inputs and the integrations it has with Docker orchestration and management tools such as Kubernetes, Mesos, and Swarm. Ilan Rabinovitch, Director of Technical Community at Datadog, agrees: “Strong integrations with Kubernetes and Swarm are tighter than others in this specific space. Due to the dynamic nature of the cloud and the realm of microservices, what is important is the ability to combine data sources and data types.”

SignalFx

SignalFx has a very interesting story to tell, based on the number of open source technologies that have been deployed on Docker in production since 2013. I chatted with software engineer Maxime Petazzoni, who was very clear on the value he sees SignalFx bringing to the table.

SignalFx allows users to perform advanced diagnostics using built-in computations and functions for real-time analysis and with extremely low latency. Real-time was indeed real-time — seeing the needles in their monitoring dashboards move every second was impressive.

SignalFx provides a number of dashboards for Docker including a per-host dashboard and a general dashboard showing data on all of the containers across hosts, making it relatively simple to drill down and troubleshoot a problematic container.

So, the value here is clear. As Maxime puts it: “As a software engineer, I see the benefit when a system can perform this kind of computation for monitoring modern applications across different time series with extremely low latency and in real-time.”

Sysdig

Sysdig is a different kind of beast.

Started as an open source project that focuses on monitoring microservices, Sysdig “sees inside your containers without instrumenting them,” says Daniel Liong, a member of the Sysdig product team.

What this means is that instead of installing an agent on your Docker host, the Sysdig agent sits at the operating system level — so instead of looking from the inside, Sysdig looks at the containers from “the outside.”

The agent looks into the actual system calls made to the kernel (system, network, disk I/O), allowing you to understand the processes that are running in the system and the services running in a container.

The results of this “container-native monitoring” are presented in a monitoring dashboard that displays a wide array of visualizations representing container-specific performance and monitoring metrics.

New Relic

New Relic is more application- and server-centric, enabling users to drill down per server from the application level to containers.

Users can see key metrics on each container by sorting them by CPU or memory. This data can then be integrated with New Relic’s Insights feature to query and visualize the collected data on your Docker environment in real-time.

Mike Panchenko, the founder of Opsmatic (which was acquired by New Relic), sees plenty of competition in the world of Docker monitoring and told me that the main difference between New Relic and others is the context that it provides: “The best tool that lets you slice and dice infrastructure the best way will win. The tool needs to be flexible enough to be suited to the user’s environment.”

Last, But Not Least…

I would be remiss if I didn’t mention our own Docker Log Collector, which offers a different type of solution. This method latches on to the Docker API and ships Docker logs, statistics, and dameon events to the Logz.io ELK Stack. This data can then be analyzed and visualized using Elasticsearch’s powerful querying capabilities and Kibana’s visualization layer. Learn more about our Docker log collector in this blog post.

What monitoring solutions impressed you at Dockercon 2016? Tell us your favorites in the comments below!

10 Elasticsearch Concepts You Need to Learn

Getting acquainted with ELK lingo is one of the first things you’re going to have to do when starting out with the stack. Just like with any programming language, there are some basic concepts that once internalized, make the learning curve less traumatic.

We’ve put together ten of the most important concepts you’re going to want to understand. While the concepts apply specifically to Elasticsearch, they are also are important to understand when operating the stack as a whole. When applicable — and to make it easier to understand — we will compare concepts to parallel terms in the world of relational databases.

1. Fields

Fields are the smallest individual unit of data in Elasticsearch. Each field has a defined type and contains a single piece of data that can be, for example, a boolean, string or array expression. A collection of fields are together a single Elasticsearch document.

Starting with Elasticsearch version 2.X, field names cannot start with special characters and cannot contain dots.

2. Documents

Documents are JSON objects that are stored within an Elasticsearch index and are considered the base unit of storage. In the world of relational databases, documents can be compared to a row in table.

For example, let’s assume that you are running an e-commerce application. You could have one document per product or one document per order. There is no limit to how many documents you can store in a particular index.

Data in documents is defined with fields comprised of keys and values. A key is the name of the field, and a value can be an item of many different types such as a string, a number, a boolean expression, another object, or an array of values.

Documents also contain reserved fields that constitute the document metadata such as:

- _index – the index where the document resides

- _type – the type that the document represents

- _id – the unique identifier for the document

An example of a document:

{

"_id": 3,

“_type”: [“user”],

"age": 28,

"name": ["daniel”],

"year":1989,

}

3. Types

Elasticsearch types are used within documents to subdivide similar types of data wherein each type represents a unique class of documents. Types consist of a name and a mapping (see below) and are used by adding the _type field. This field can then be used for filtering when querying a specific type.

An index can have any number of types, and you can store documents belonging to these types in the same index.

4. Mapping

Like a schema in the world of relational databases, mapping defines the different types that reside within an index. It defines the fields for documents of a specific type — the data type (such as string and integer) and how the fields should be indexed and stored in Elasticsearch.

A mapping can be defined explicitly or generated automatically when a document is indexed using templates. (Templates include settings and mappings that can be applied automatically to a new index.)

# Example

curl -XPUT localhost:9200/example -d '{

"mappings": {

"mytype": {

"properties": {

"name": {

"type": "string"

},

"age": {

"type": "long"

}

}

}

}

}'

5. Index

Indices, the largest unit of data in Elasticsearch, are logical partitions of documents and can be compared to a database in the world of relational databases.

Continuing our e-commerce app example, you could have one index containing all of the data related to the products and another with all of the data related to the customers.

You can have as many indices defined in Elasticsearch as you want. These in turn will hold documents that are unique to each index.

Indices are identified by lowercase names that refer to actions that are performed actions (such as searching and deleting) against the documents that are inside each index.

6. Shards

Put simply, shards are a single Lucene index. They are the building block of Elasticsearch and are what facilitate its scalability.

Index size is a common cause of Elasticsearch crashes. Since there is no limit to how many documents you can store on each index, an index may take up an amount of disk space that exceeds the limits of the hosting server. As soon as an index approaches this limit, indexing will begin to fail.

One way to counter this problem is to split up indices horizontally into pieces called shards. This allows you to distribute operations across shards and nodes to improve performance.

When you create an index, you can define how many shards you want. Each shard is an independent Lucene index that can be hosted anywhere in your cluster:

# Example

curl -XPUT localhost:9200/example -d '{

"settings" : {

"index" : {

"number_of_shards" : 2,

"number_of_replicas" : 1

}

}

}'

7. Replicas

Replicas, as the name implies, are Elasticsearch fail-safe mechanisms and are basically copies of your index’s shards. This is a useful backup system for a rainy day — or, in other words, when a node crashes. Replicas also serve read requests, so adding replicas can help to increase search performance.

To ensure high availability, replicas are not placed on the same node as the original shards (called the “primary” shard) from which they were replicated.

Like with shards, the number of replicas can be defined per index when the index is created. Unlike shards, however, you may change the number of replicas anytime after the index is created.

See the example in the “Shards” section above.

8. Analyzers

Analyzers are used during indexing to break down phrases or expressions into terms. Defined within an index, an analyzer consists of a single tokenizer and any number of token filters. For example, a tokenizer could split a string into specifically defined terms when encountering a specific expression.

By default, Elasticsearch will apply the “standard” analyzer, which contains a grammar-based tokenizer that removes common English words and applies additional filters. Elasticsearch comes bundled with a series of built-in tokenizers as well, and you can also use a custom tokenizer.

A token filter is used to filter or modify some tokens. For example, a ASCII folding filter will convert characters like ê, é, è to e.

# Example

curl -XPUT localhost:9200/example -d '{

"mappings": {

"mytype": {

"properties": {

"name": {

"type": "string",

"analyzer": "whitespace"

}

}

}

}

}'

9. Nodes

The heart of any ELK setup is the Elasticsearch instance, which has the crucial task of storing and indexing data.

In a cluster, different responsibilities are assigned to the various node types:

- Data nodes — stores data and executes data-related operations such as search and aggregation

- Master nodes — in charge of cluster-wide management and configuration actions such as adding and removing nodes

- Client nodes — forwards cluster requests to the master node and data-related requests to data nodes

- Tribe nodes — act as a client node, performing read and write operations against all of the nodes in the cluster

- Ingestion nodes (this is new in Elasticsearch 5.0) — for pre-processing documents before indexing

By default, each node is automatically assigned a unique identifier, or name, that is used for management purposes and becomes even more important in a multi-node, or clustered, environment.

When installed, a single node will form a new single-node cluster entitled “elasticsearch,” but it can also be configured to join an existing cluster (see below) using the cluster name. Needless to say, these nodes need to be able to identify each other to be able to connect.

In a development or testing environment, you can set up multiple nodes on a single server. In production, however, due to the amount of resources that an Elasticsearch node consumes, it is recommended to have each Elasticsearch instance run on a separate server.

10. Cluster

An Elasticsearch cluster is comprised of one or more Elasticsearch nodes. As with nodes, each cluster has a unique identifier that must be used by any node attempting to join the cluster. By default, the cluster name is “elasticsearch,” but this name can be changed, of course.

One node in the cluster is the “master” node, which is in charge of cluster-wide management and configurations actions (such as adding and removing nodes). This node is chosen automatically by the cluster, but it can be changed if it fails. (See above on the other types of nodes in a cluster.)

Any node in the cluster can be queried, including the “master” node. But nodes also forward queries to the node that contains the data being queried.

As a cluster grows, it will reorganize itself to spread the data.

There are a number of useful cluster APIs that can query the general status of the cluster.

For example, the cluster health API returns health status reports of either “green” (all shards are allocated), “yellow” (the primary shard is allocated but replicas are not), or “red” (the shard is not allocated in the cluster). More about cluster APIs is here.

# Output Example

{

"cluster_name" : "elasticsearch",

"status" : "yellow",

"timed_out" : false,

"number_of_nodes" : 1,

"number_of_data_nodes" : 1,

"active_primary_shards" : 5,

"active_shards" : 5,

"relocating_shards" : 0,

"initializing_shards" : 0,

"unassigned_shards" : 5,

"delayed_unassigned_shards" : 0,

"number_of_pending_tasks" : 0,

"number_of_in_flight_fetch" : 0,

"task_max_waiting_in_queue_millis" : 0,

"active_shards_percent_as_number" : 50.0

}

A Final Note

These are the main concepts you should understand when getting started with ELK, but there are other components and terms as well. We couldn’t cover them all, so I recommend referring to Elastic for additional information.

Happy indexing!

Logz.io is a predictive, cloud-based log management platform that is built on top of the open-source ELK Stack, which includes Elasticsearch. Start your free trial today!

Finding the Needle in a Haystack: Anomaly Detection with the ELK Stack

The following scenario might sound familiar.

It’s the middle of the night. Your mobile starts vibrating. On the other end, it’s a frantic customer (or your boss) complaining that the website is down. You enter crisis mode and start the troubleshooting process, which involves ingesting a large amount of caffeine and — usually — ends with the problem being solved.

Sometimes, this scenario can be avoided by using the correct logging and monitoring strategy. Centralized logging, for example, is a useful strategy to implement but not easy to use. The biggest challenge, of course, is sifting through the huge volumes of log data that come into the system and identifying correlations and anomalies.

One of the reasons that the ELK Stack is so popular is that it is a very useful tool for streaming large sets of data from different sources, identifying correlations using queries and searches, and creating rich visualizations and monitoring dashboards on top of them.

This post describes a simple workflow process to troubleshoot a website crash by analyzing different types of logs and finding a correlation between them. To do this, we will use the enterprise-grade ELK Stack hosted by Logz.io — but most of the described steps can be performed with any installation of open source ELK.

The scenario that I will use consists of a simple web application that is based on an Apache web server and MySQL database. In this case, the ELK Stack is ingesting Apache logs, database logs, and server performance metrics using the Logz.io performance agent.

It’s crisis time

To start the troubleshooting process, we’re going to access our Kibana interface and begin querying Elasticsearch for relevant log messages.

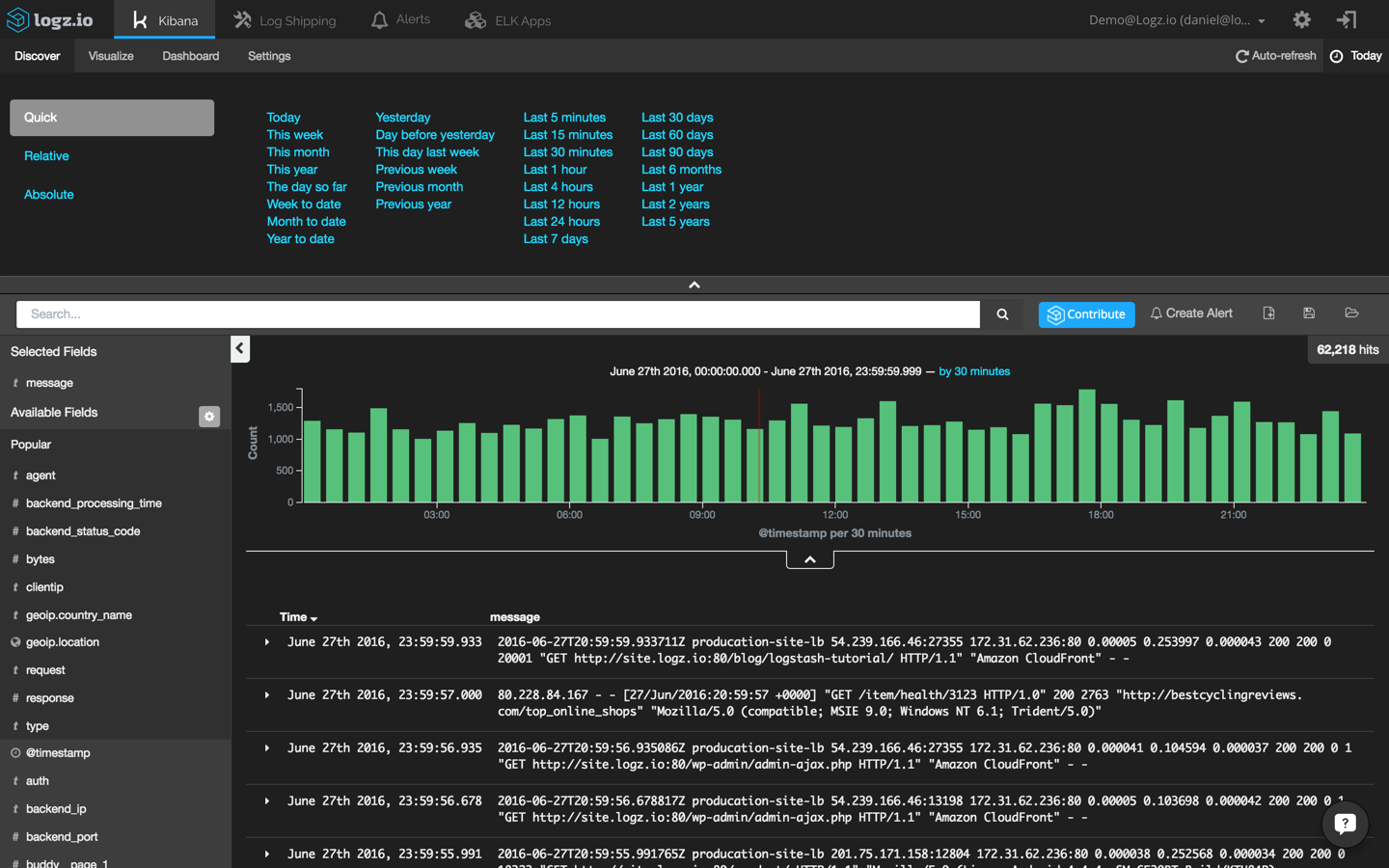

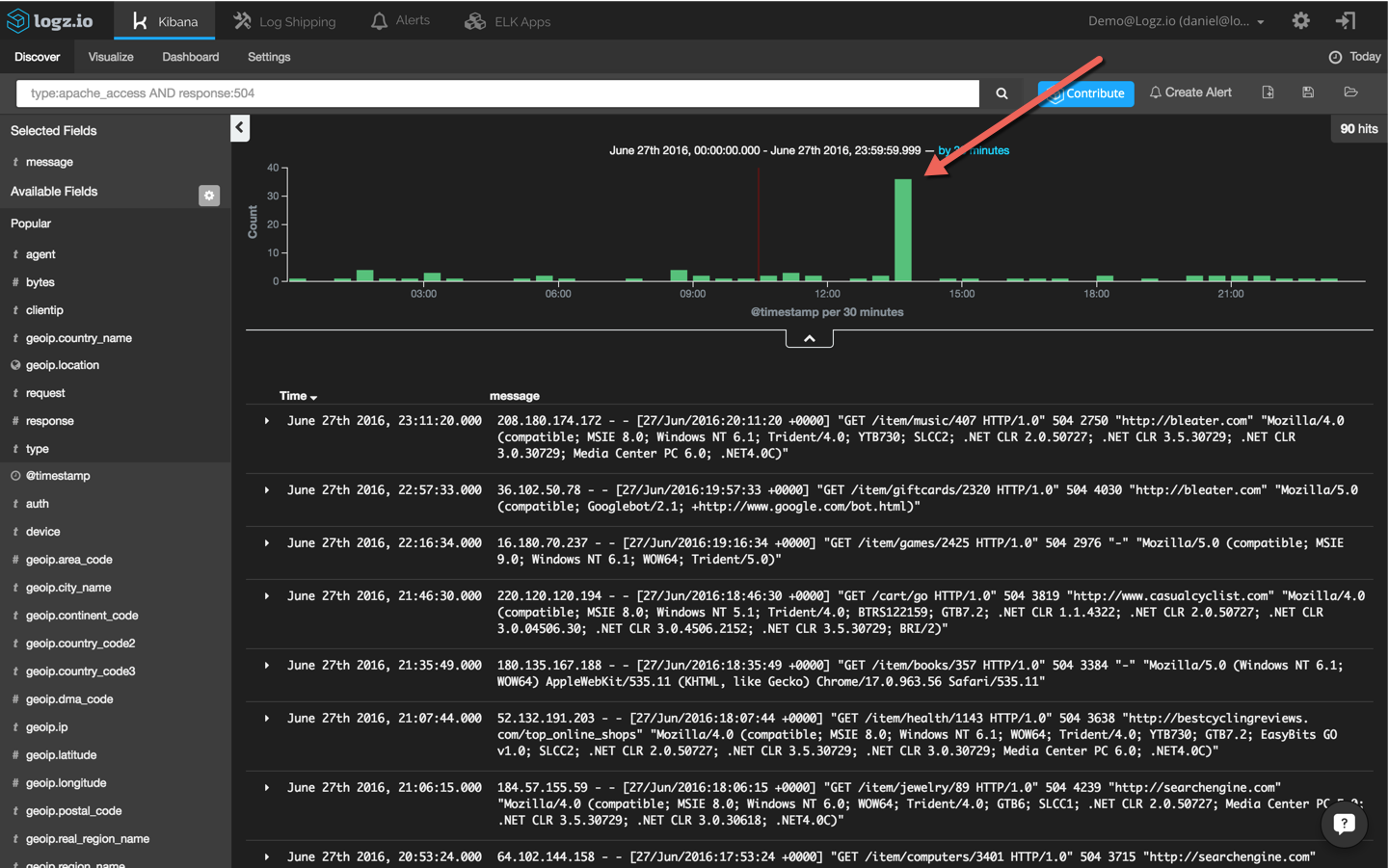

First, I’m going to set the time frame to “Today” to get an overview of all of the recent events being logged:

In this case, I can see more than 60,000 log messages within this time frame. Not very useful, right? It’s time to try and find our needle in the haystack by querying Elasticsearch in Kibana.

Querying is Kibana’s bread and butter and an art unto itself, but the truth is that it is not always easy. I’d recommend reading up on the topic in our Kibana tutorial to acquaint yourself with the various types of searches.

Now, since we’re investigating a site crash, a good place to start would be our Apache logs (you can also read more here about using the ELK Stack as an Apache log analyzer). Specifically, we’re going to use a field-level search to pinpoint Apache error messages:

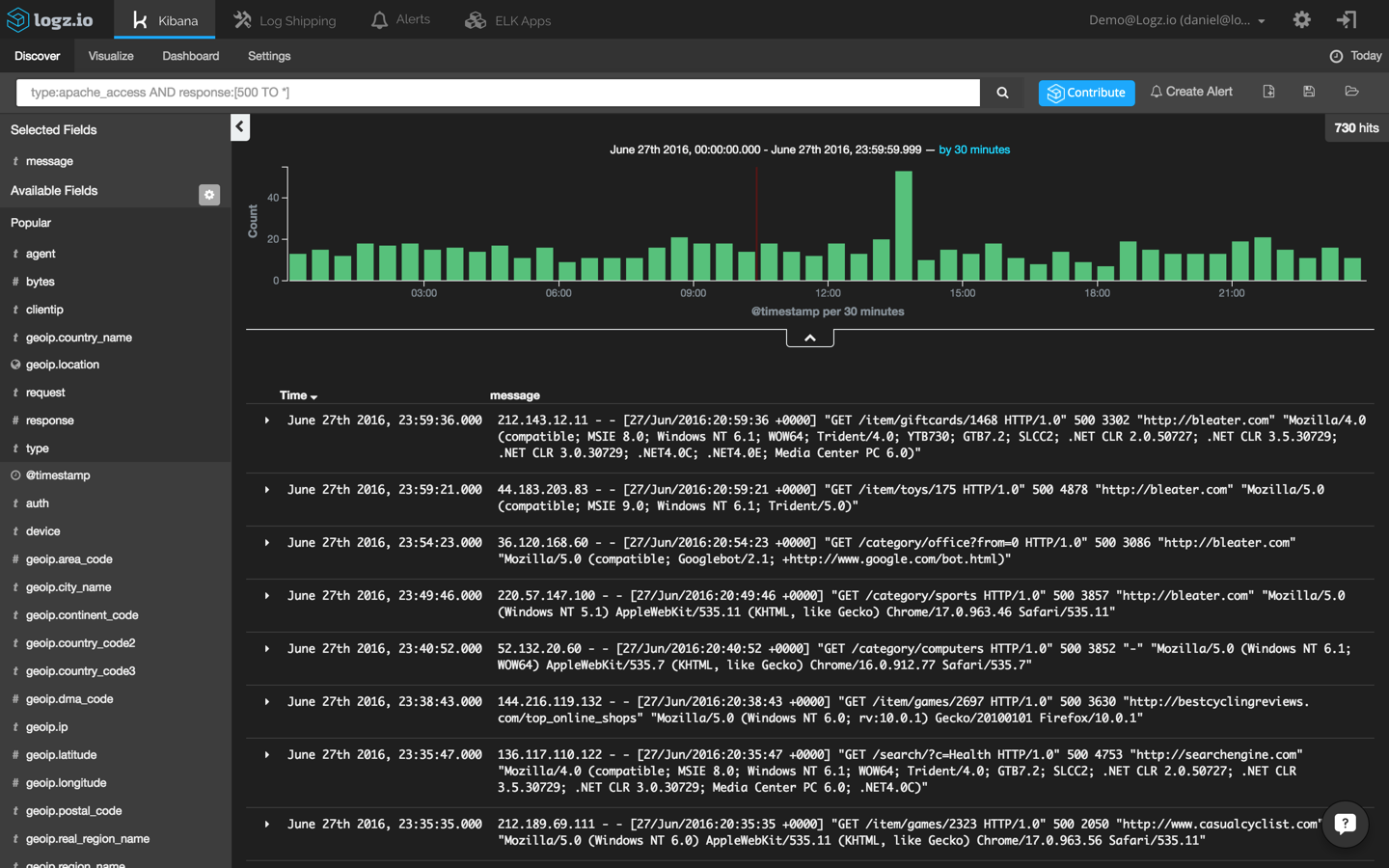

type:apache_access AND response:[500 TO *]

This returns a list of 730 Apache errors with response codes of 500 and above:

Our haystack is still a bit too large to be able to return useful information. To narrow it down further, I’m going to have to be more specific and focus on 504 responses:

type:apache_access AND response:504

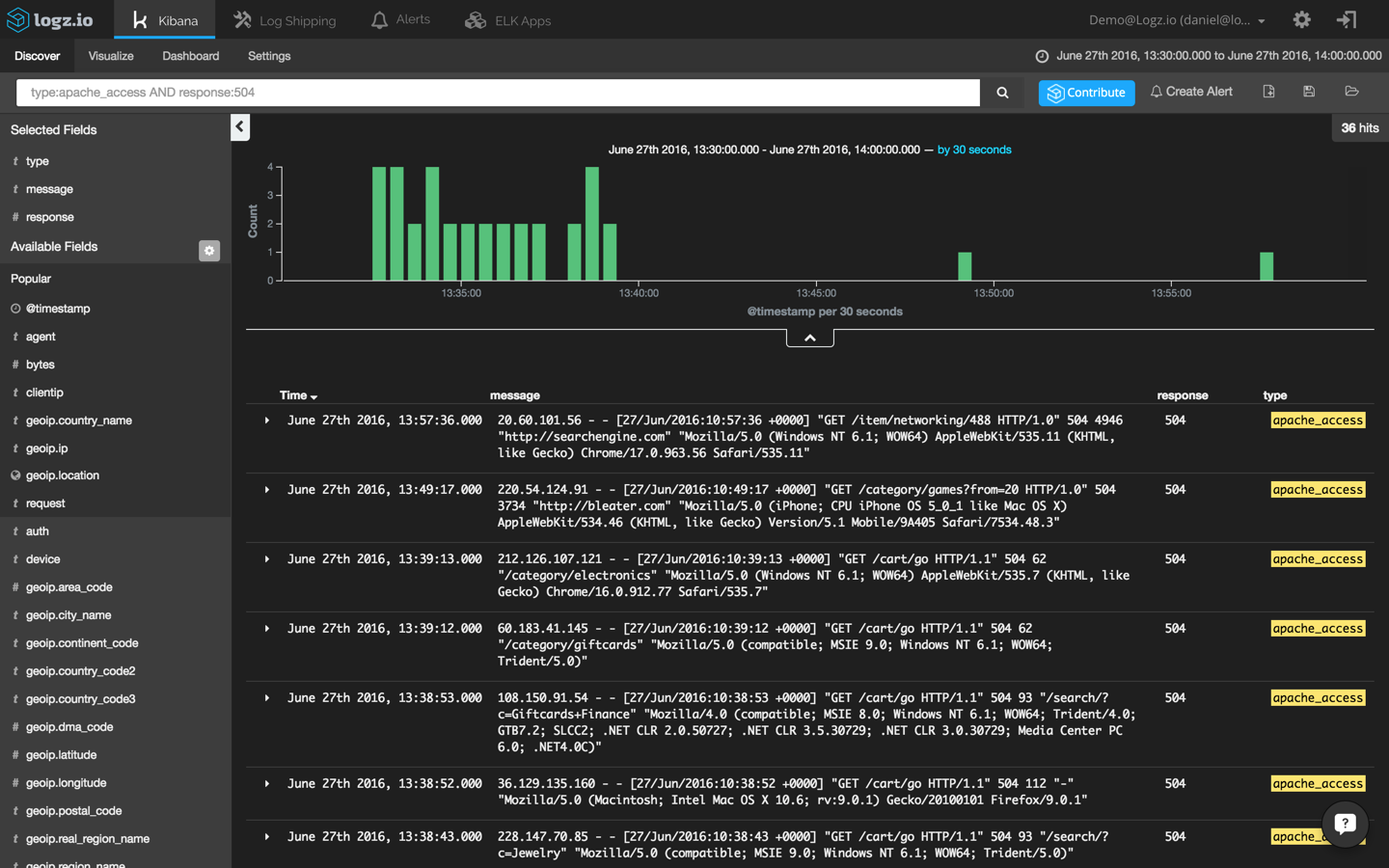

Now, we’re talking. This search results in an obvious and visible peak in events. To drill down further, I’m going to click on the bucket and add the ‘response’ and ‘type’ fields to the message list to make the list of events more readable:

If we select one of the displayed log messages, we will get a detailed view of the event — a list of the message fields and their values (select the JSON tab to see the message in JSON format):

{

"_index": "logz-xmywsrkbovjddxljvcahcxsrbifbccrh-160627_v1",

"_type": "apache_access",

"_id": "AVWPKMnATxyubhgJppgh",

"_score": null,

"_source": {

"request": "/cart/go",

"agent": "\"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)\"",

"minor": "1",

"auth": "-",

"ident": "-",

"type": "apache_access",

"major": "2",

"clientip": "128.93.102.172",

"geoip": {

"timezone": "Europe/Paris",

"ip": "128.93.102.172",

"latitude": 46,

"country_code2": "FR",

"country_name": "France",

"country_code3": "FRA",

"continent_code": "EU",

"location": [

2,

46

],

"longitude": 2

},

"os": "Other",

"verb": "GET",

"message": "128.93.102.172 - - [27/Jun/2016:10:34:53 +0000] \"GET /cart/go HTTP/1.1\" 504 93 \"-\" \"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)\"",

"tags": [

"_logz_upload_8021",

"apache-geoip"

],

"referrer": "\"-\"",

"@timestamp": "2016-06-27T10:34:53.000Z",

"response": 504,

"bytes": 93,

"name": "Googlebot",

"os_name": "Other",

"httpversion": "1.1",

"device": "Spider"

},

"fields": {

"@timestamp": [

1467023693000

]

},

"highlight": {

"type": [

"@kibana-highlighted-field@apache_access@/kibana-highlighted-field@"

]

},

"sort": [

1467023693000

]

}This is great, but how do we translate this information into something actionable?

Pinpointing the cause

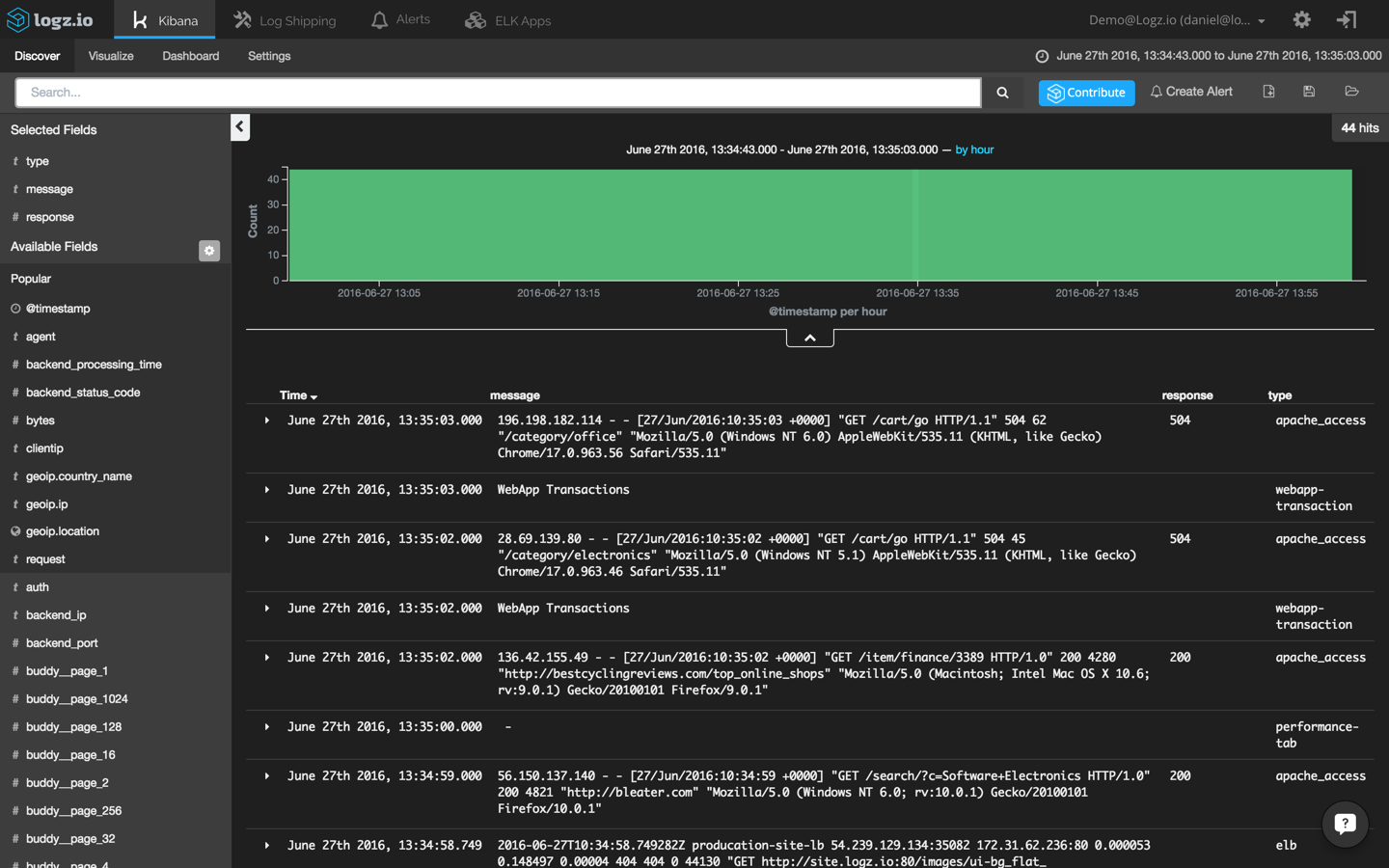

To get a wider perspective and try to understand whether there is a correlation between our Apache errors and the other events taking place in the environment, let’s “zoom out” while we are logged into ELK.

To do this, we’re going to use a useful feature that is specific to the Logz.io ELK stack — the +/- button that is in the top-right corner of each log message. This gives you the option of easily displaying other events that have taken place within one second, ten seconds, thirty seconds, or sixty seconds of the initial selected event. (If you’re using the open source version of Kibana, you can do the exact same thing by manually configuring the time frame.)

Clicking +/- ten seconds and removing the search filter we used to find the Apache 504 events gives us an aggregated view of all events coming within our entire system and taking place ten seconds before and after our 504 events:

What suddenly becomes visible is that Apache 504 responses are being logged right after WebApp Transaction errors. Opening one of these errors reveals that transactions are failing because of connection timeouts with our backend:

{

"_index": "logz-xmywsrkbovjddxljvcahcxsrbifbccrh-160627_v1",

"_type": "webapp-transaction",

"_id": "AVWPKMuFbofAsbciXwUI",

"_score": null,

"_source": {

"transaction_id": "25ce2a4f-3f3d-4666-9339-ca63597a8e30",

"latency": "403",

"return_message": "Transaction Failed - Connection Timeout",

"message": "WebApp Transactions",

"type": "webapp-transaction",

"tags": [

"_logz_http_bulk_json_8070"

],

"@timestamp": "2016-06-27T10:35:03.000+00:00",

"return_code": "617"

},

"fields": {

"@timestamp": [

1467023703000

]

},

"sort": [

1467023703000

]

}

Start the monitoring game

It’s time to pat ourselves on the back. We have successfully detected the anomaly, made a correlation between logs, and troubleshooted the root cause. The good news is that this is the hard part of the process. The bad news is that we are far from done.

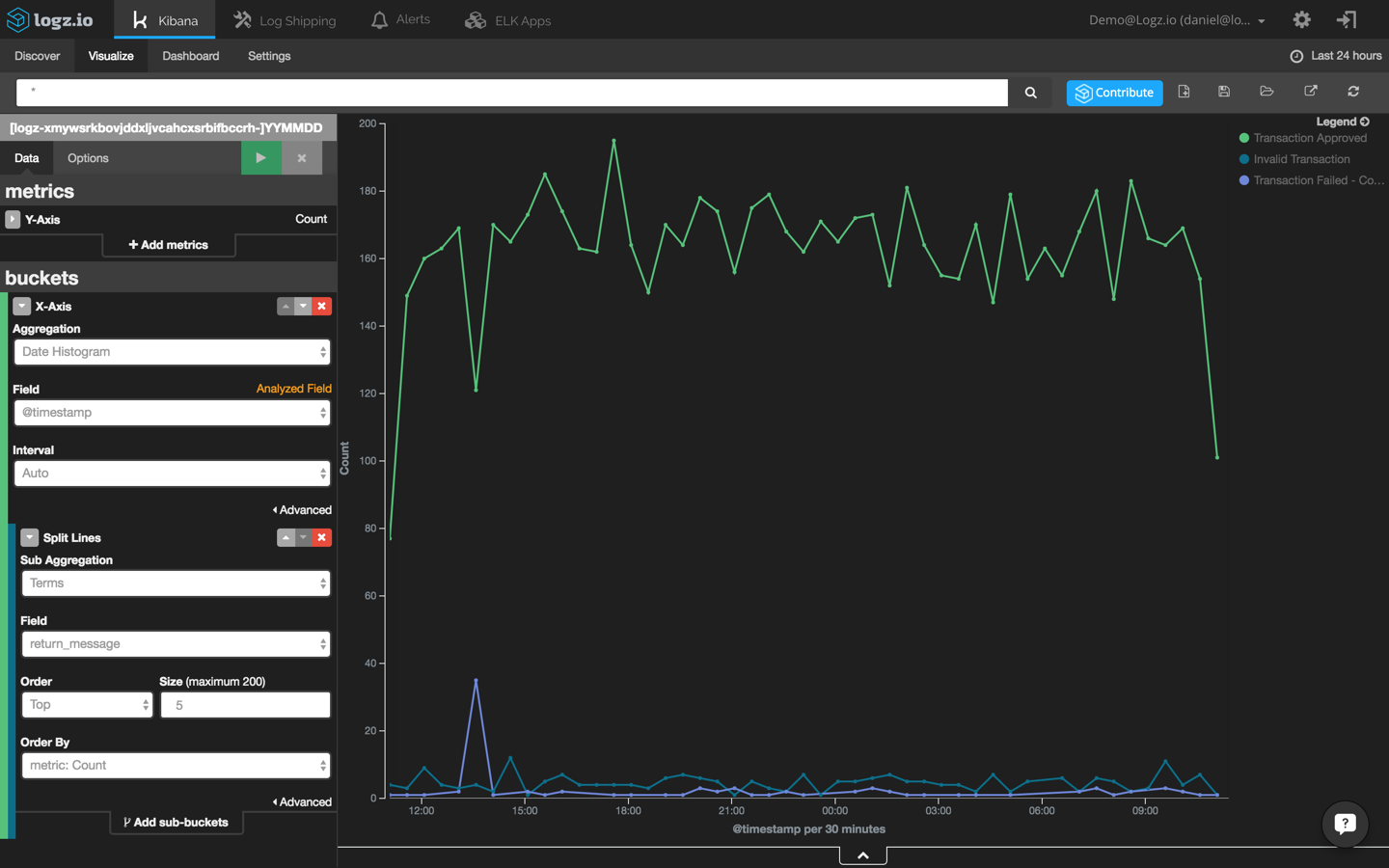

To keep tabs on these events, it’s now time to set up some monitoring graphs. In ELK lingo, these are the Kibana visualizations and dashboards.

First, we are going to create a new line chart that shows webapp transactions across time. To do this, we will use the ‘return_message’ field:

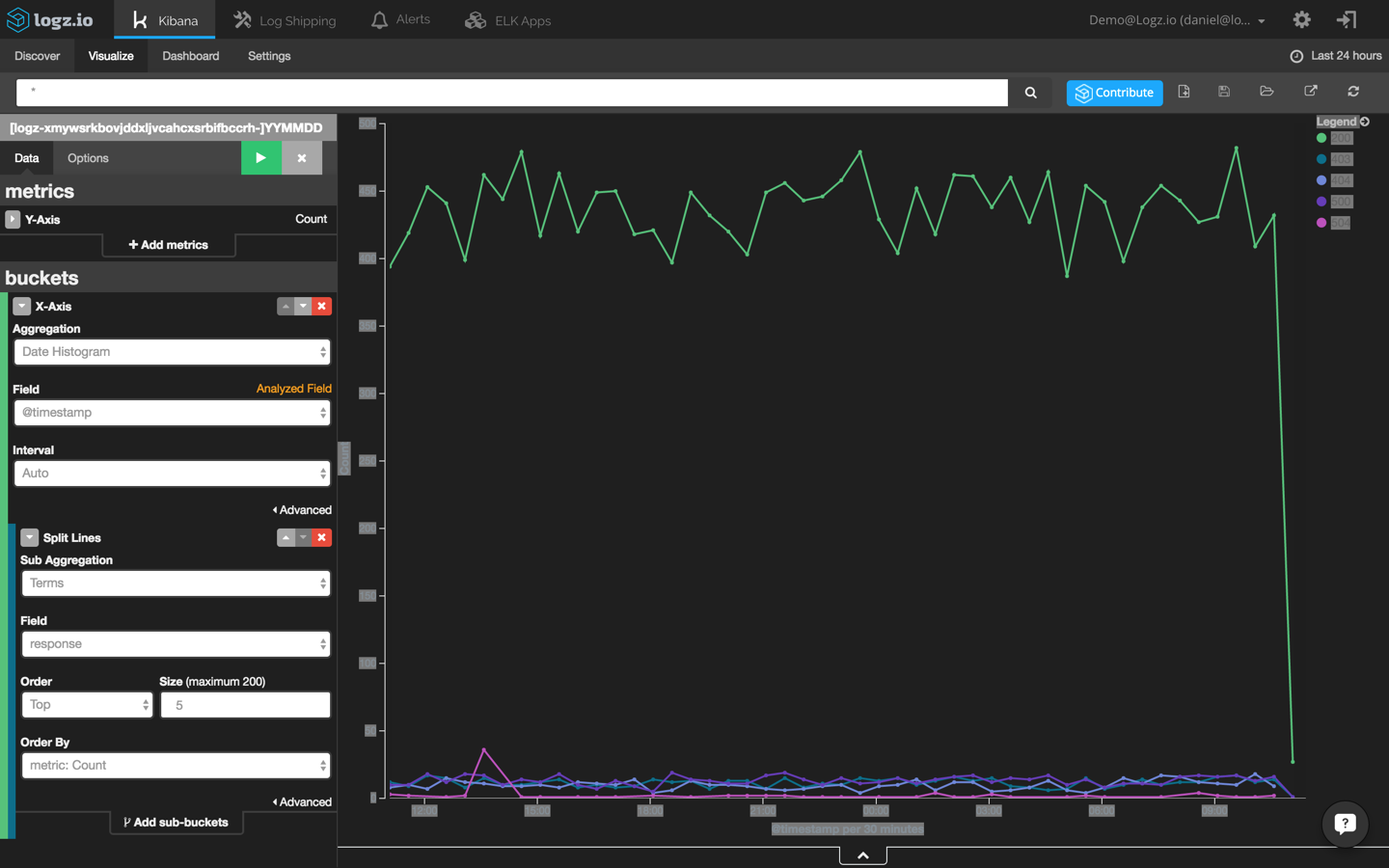

Next, we are going to create a new line chart that shows HTTP response transactions across time. To do this, will use the ‘response’ field:

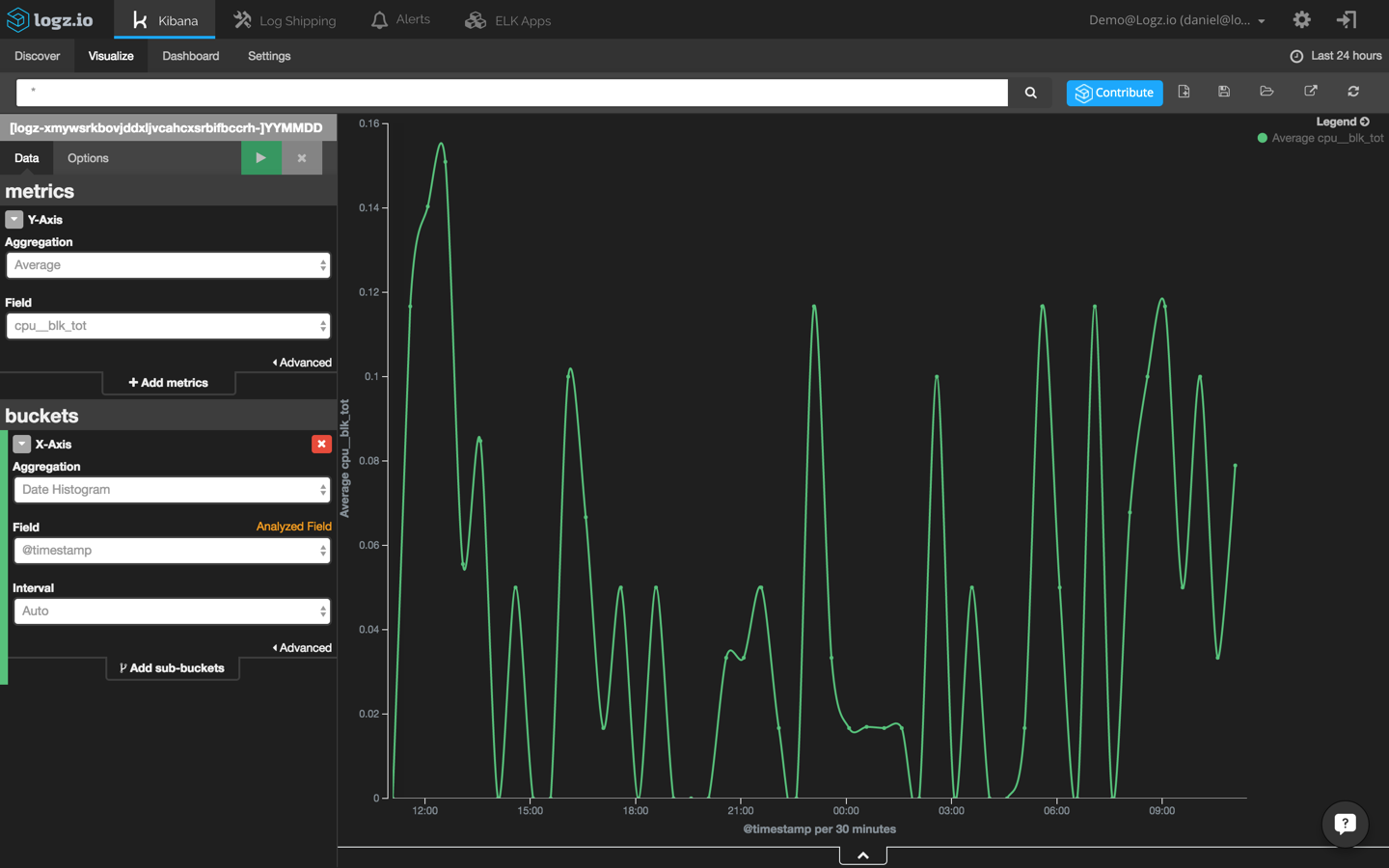

Last but not least, we are going to create another visualization that shows CPU usage across time using the ‘cpu_blk_tot’ field:

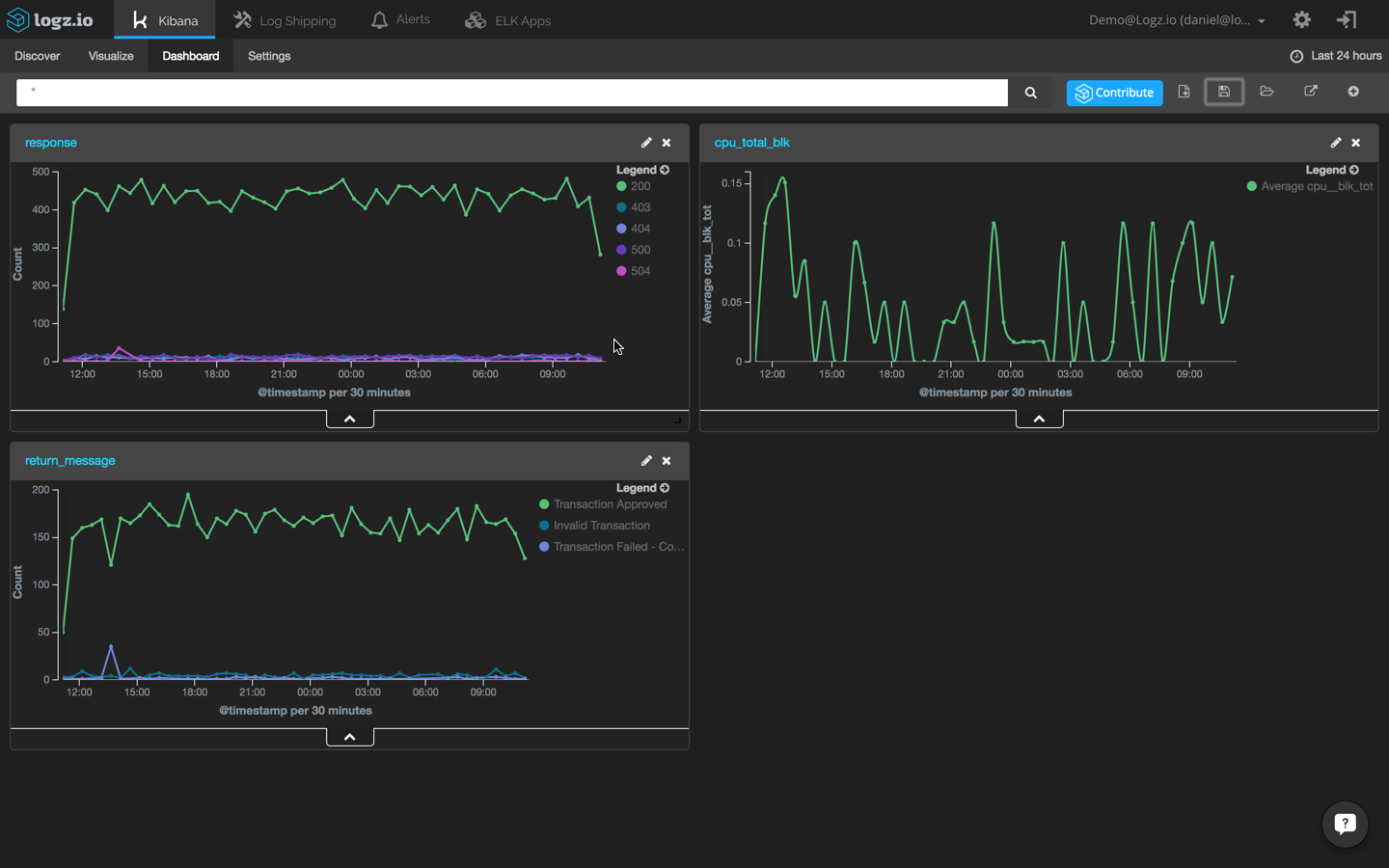

Add these three visualizations to a single dashboard to identify event correlations easily:

Of course, these are only a few examples of how to visualize log data. You can slice and dice the data any way you want, it all depends on which events you are monitoring and the set up of your environment.

Getting proactive

Now, how do we prevent such events from happening in the future?

There is usually no effective way for DevOps engineers to prevent website crashes that result from bad code that developers have written. However, there are ways for DevOps crews to be more proactive in their logging strategies so that they can find the causes of the crashes more quickly.

For example, creating alerts based on queries will alert you to events in real time. As an example, I will use the Logz.io alerting mechanism. If you’re using your own ELK Stack, you will need to configure your own alerting system.

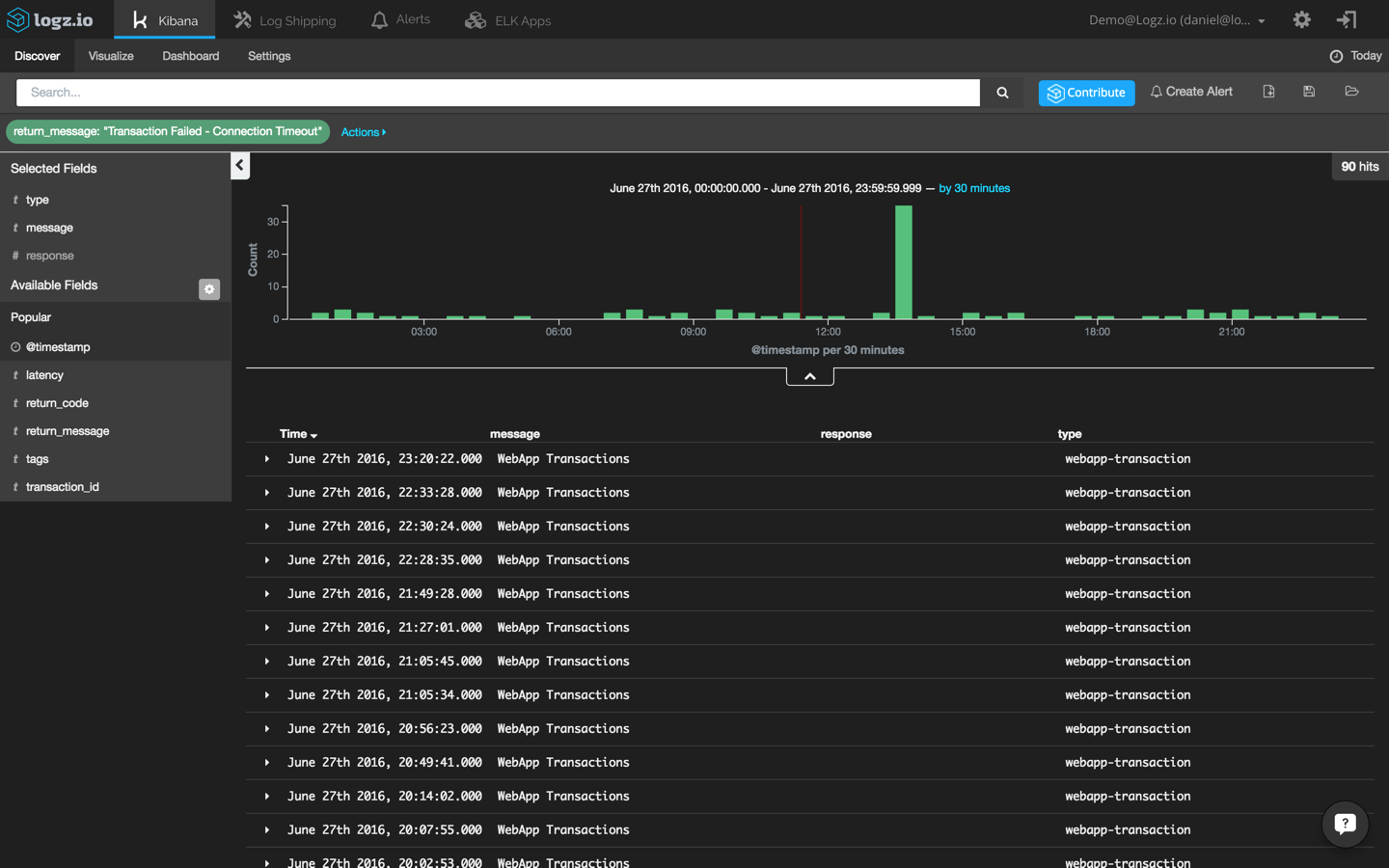

First, let’s filter the list of events displayed in Kibana to show only transaction errors. To do this, we’re going to click this magnifying icon adjacent to the ‘return_message’ field:

Also, we’re going to change the time frame to back to ‘today’ to get a wide perspective:

This helps us to identify what can be considered an “anomaly” in the context of transaction errors. We can see that up to three errors occur every thirty minutes. Also, we can see a peak of thirty-five events during our reported crash.

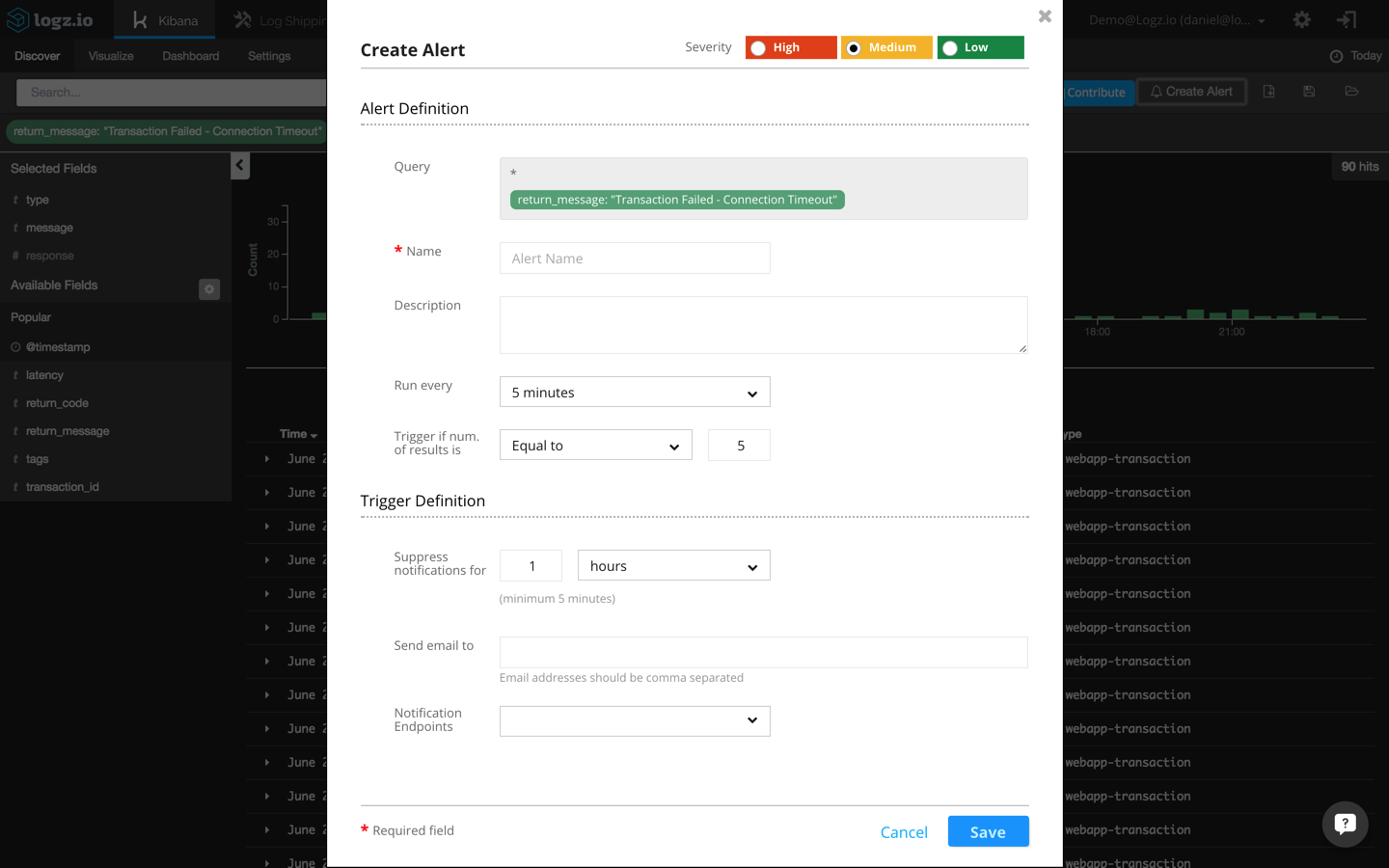

In the Kibana interface built into the Logz.io UI, I’m going to click the “Create Alert” button in the top right-hand corner:

The actual query to be monitored is automatically entered in the alert definition dialog:

return_message: "Transaction Failed - Connection Timeout"

Additional fields to be entered here are a name and description for the alert, a threshold configuration, and the trigger definition (how you want to be notified when an alert is triggered).

For the threshold in this example, I’m going to configure Logz.io to send me a notification whenever more than three events occur within a five-minute timeframe.

Last but not least, I’m going to configure the alert to send me the notifications over email. Other available options are to use webhooks to receive notifications via a messaging app such as HipChat or Slack.

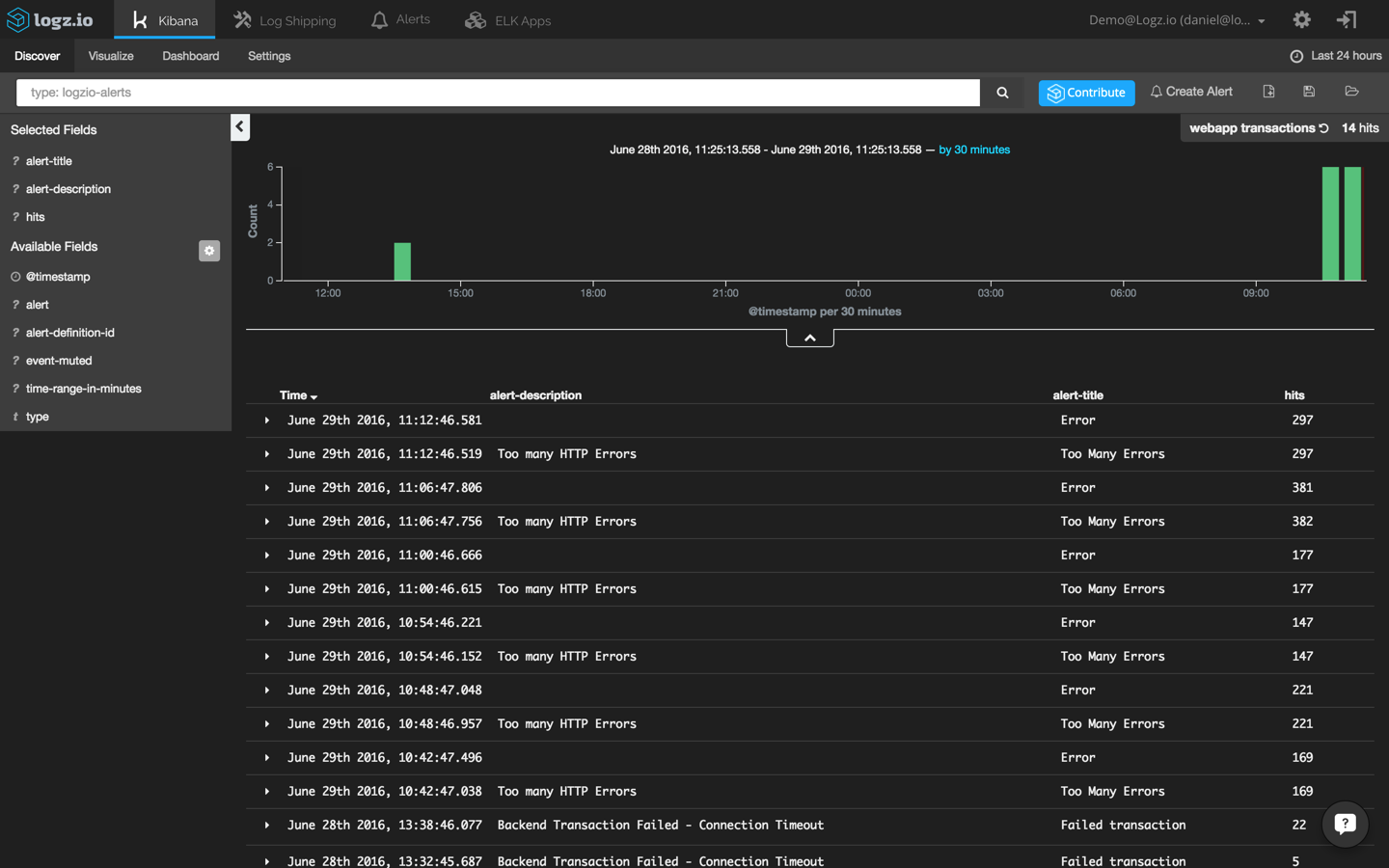

Once saved, alerts can be edited and managed from the “Alerts” tab. You can also create a visualization in Kibana that shows you the various Logz.io alerts that are being triggered.

To do this, I’m going to use the following query to search for alerts:

type: logzio-alerts

Next, I’m adding the ‘alert-description’ and ‘alert-title’ to get some visibility into the alerts being logged:

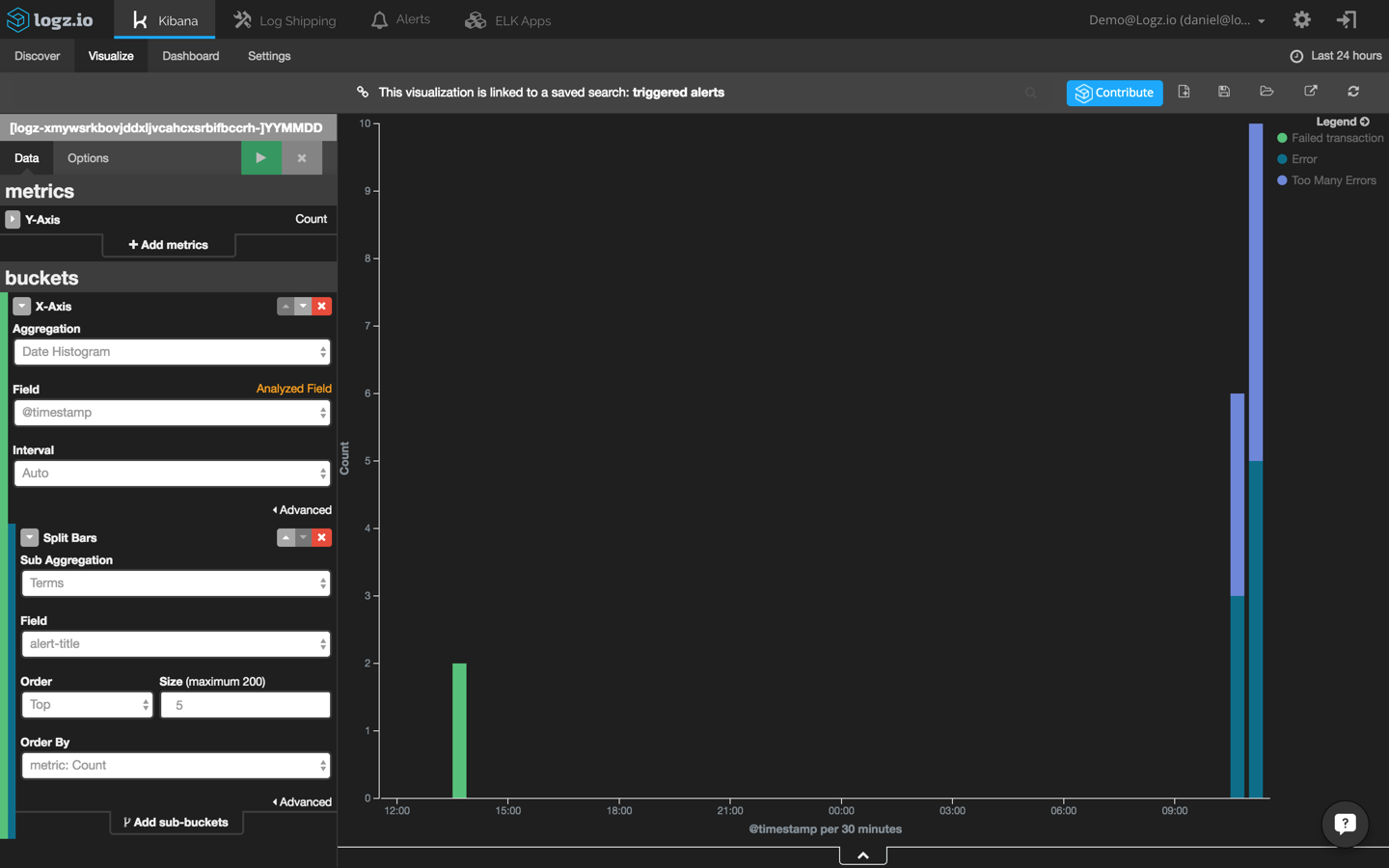

Using this search, I’m going to create a new bar chart visualization that shows me the different alerts that are being triggered in Logz.io over time:

A final note

Finding a book in a library without knowing the title and author name is impossible. In the field of log analytics, the ELK Stack makes this task easier with its powerful querying capabilities. Still — identifying anomalies within large sets of data remains a real obstacle for DevOps teams.

To solve this problem, our developers here at Logz.io are working hard on building machine-learning features on top of the ELK Stack that will use actionable insights to shorten the troubleshooting cycle.

Exciting news is coming. Stay tuned!

Logz.io is a predictive, cloud-based log management platform that is built on top of the open-source ELK Stack. Start your free trial today!

MongoDB Performance Monitoring Using The ELK Stack

MongoDB, one of the most popular NoSQL databases today, is designed to process and store massive amounts of data. The tool is used by many well-known, modern IT organizations such as Facebook, eBay, Foursquare, and Expedia. Monitoring is a critical component of all database administration, and tight monitoring of your MongoDB cluster will allow you to assess the state of your database. However, due to its complex architecture which allows for virtually unlimited scaling, monitoring is a challenging task.

In this article, we will explain how to collect and analyze some of the MongoDB metrics using the ELK Stack so that you can keep a close eye on your MongoDB performance and growth.

MongoDB Metrics to Track

In this article, we will use the latest version of MongoDB (version 3.2) and focus on metrics that are available using the WiredTiger storage engine. This is currently MongoDB 3.0’s default storage engine. We will focus on tracking and metric analysis to get an overview of its database performance, resource utilization, and saturation. These are accessible using MongoDB commands.

Throughput

MongoDB (with WiredTiger storage engine) provides several commands that can be used to collect metrics using mongo shell. Mongo shell is an interactive JavaScript interface for MongoDB that allows you to query data and take administrative actions.

One of the rich commands that provides a lot of information about items including operations, connections, journaling, background flushing, memory, locking, asserts, cursors, and cache is the serverStatus (i.e., db.serverStatus()).

These throughput metrics are important since they can be used to avoid many performance issues, such as resource overloading. To get a general overview of your MongoDB cluster activities, you should first look at number of read/write clients and the number of db operations that they perform. These metrics can be retrieved using serverStatus opcounters and globalLock objects.

The objects’ output is in JSON, such as show in the example below:

….

"opcounters": {

"insert": 0,

"query": 1,

"update": 12,

"delete": 5,

"getmore": 0,

"command": 23

}

….

The opcounters part of the serverStatus output

Opcounters.query and opcounters.getmore commands return metrics that indicate the number of read requests received from the time the mongod (a process that handles data requests and manages data access) instance last began. On the other hand, opcounters.insert, opcounters.update, and opcounters.delete return the number of write requests received.

By monitoring the number of read and write requests, you can quickly prevent resource saturation as well as spot bottlenecks and the root cause of overloads. In addition, these metrics will allow you to assess when and how you need to scale your cluster.

As shown above, globalLock is a document that reports on the database’s lock state and can provide you with information regarding read/write request statuses. These will allow you to check if requests are accumulating faster than they are being processed. The same applies to activeClients.readers and activeClients.writers. These can enable you to learn about the relationship between the amount of current active clients and your database load.

"globalLock": {

"totalTime": NumberLong(254415000),

"currentQueue": {

"total": 0,

"readers": 0,

"writers": 0

},

"activeClients": {

"total": 8,

"readers": 0,

"writers": 0

}

}

…

The globalLock part of the serverStatus output

Performance and Failover

Using a replica set (a master-slave replication that facilitates load balancing and failover) is a must to ensure your production robustness. The oplog (operations log) is the main component of the MongoDB replication mechanism. Below, you can see the relevant metrics that can be retrieved using the getReplicationInfo and replSetGetStatus commands.

As shown below, replica set member statuses are composed of a few indications such as the replica state and optimeDate field, which is important for calculating the replication lag metric that contains the date when the last entry from the oplog is applied to that member):

...

"members" : [

{

"_id" : 0,

"name" : "<HOST1>",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 250,

"optime" : {

"ts" : Timestamp(1447946570, 1),

"t" : NumberLong(1)

},

"optimeDate" : <DATE>,

"infoMessage" : "could not find member to sync from",

"electionTime" : <TIMESTAMP>,

"electionDate" : <DATE>,

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "<HOST2>",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 13,

"optime" : {

"ts" : Timestamp(1447946549, 1),

"t" : NumberLong(-1)

},

"optimeDate" : <DATE>,

"lastHeartbeat" : <DATE>,

"lastHeartbeatRecv" : <DATE>,

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 2,

"name" : "<HOST2>",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 13,

"optime" : {

"ts" : Timestamp(1447946549, 1),

"t" : NumberLong(-1)

},

"optimeDate" : <DATE>,

"lastHeartbeat" : <DATE>,

"lastHeartbeatRecv" : <DATE>,

"pingMs" : NumberLong(0),

"configVersion" : 1

}

]

…

The member part of the replSetGetStatus output

Replication lag is used to show the difference between the primary and secondary. Since you want to avoid serving outdated information, it’s important to keep the difference between the two as narrow as possible. If you lack any existing load issues, your replication lag will be zero. This is ideal. However, if the number rises for your secondary nodes, the integrity of your data is at risk. To avoid such events, we recommend setting alerts on these metrics so that you can constantly monitor your replica status. Learn more about replication lag here.

Resource utilization

One of the most important metrics is the number of client connections. This includes current active connected clients and the unused connections as well. These can be reported using serverStatus:

...

"connections": {

"current": 1,

"available": 818,

"totalCreated": NumberLong(4)

}

...

The connections part of the serverStatus output

An unexpected rise in the client connections metric can occur if the connection is not handled well or if there is an issue inside of the MongoDB driver that is used for handling the connection. Tracking the behavior of these metrics will allow you to set the relevant summary metrics such as the average amount as alerts’ thresholds.

Another set of very important metrics is related to storage. These can be be retrieved using the db.stats() command, which will return statistics for the selected database. Running it using the Mongo shell to get statistics on the database test_mongo_db looks like this:

mongo test_mongo_db --eval "db.stats()"

The next JSON snippet is from the db.stats output:

{

"db": "test",

"collections": 1,

"objects": 1,

"avgObjSize": 40,

"dataSize": 40,

"storageSize": 4096,

"numExtents": 0,

"indexes": 1,

"indexSize": 4096,

"fileSize": 0,

"ok": 1

}

Example of db.stats output

If you look inside the output of db.stats command, you will find (similar to the example above) metrics for the number of objects (documents) within all of the collections (collections property in the output), the size of all documents (dataSize property in bytes), the size of all indexes (indexSize property, in bytes) and total amount of space allocated to collections in this database for document storage.

Monitoring dataSize, indexSize or storageSize metrics will show you the change in physical memory allocation and will help you to keep your cluster healthy with enough storage to serve your database. On the other hand, a large drop in dataSize can also indicate that there are many requested deletions, which should be investigated to confirm that they are legitimate operations.

The following metrics that should be monitored are the memory metrics using serverStatus. The interested tuple of metrics is virtual memory usage, which is located in the mem.virtual property (in MB), and the amount of memory used by the database, which is located in the mem.resident property (in MB). Similar to the storage metrics, memory metrics are important to monitor because overloading RAM memory within your server(s) is never good. This can lead to the slowing or crashing of your server, which will leave your cluster weakened. Or, even worse, if you have only one dedicated server, MongoDB can dramatically slow down or even crash.

Another important set of metrics is located in the extra_info.page_faults property of the serverStatus output: the number of page faults or the number of times MongoDB failed to get data from the disk.

"mem": {

"bits": 64,

"resident": 82,

"virtual": 245,

"supported": true,

"mapped": 0,

"mappedWithJournal": 0

}"extra_info": {

"note": "fields vary by platform",

"heap_usage_bytes": 59710000,

"page_faults": 1

}

The mem and extra_info part of the serverStatus output

Collecting and Monitoring Using ELK

In this section, we will described how to ship, store, and monitor your MongoDB performance metrics detailed above using the Logz.io ELK Stack.

We will use the Ubuntu Server 16.04 on Amazon cloud. You can also read our step-by-step article if you would like to know how to install and configure the ELK stack on Amazon cloud.

Extracting the MongoDB Metrics

In the next step, we will demonstrate how to ship metrics to Elasticsearch with Logstash. Using some programming to retrieve metrics will give you better control and allow you to run complex pre-shipping actions.

To ship logs, we will create a Logstash configuration file with the input path, including how to interpret it and where to send it. Learn more about Logstash configuration here.

Before we create the Logstash configuration file, we will describe how to retrieve the MongoDB metrics specifically — using the mongo shell interface via the bash of your OS.

If we want to execute the serverStatus command via our terminal, without staying in the mongo shell program, we can use –eval flag of the mongo shell program as follows:

mongo --eval "db.serverStatus()"

And the output:

MongoDB shell version: 3.2.7

Connection to: <db>

{

<the JSON objects>

}

The output format from the serverStatus command

As you can see, the first two lines of the output contain information about the MongoDB shell version and to which database the shell is currently connected. Since this format does not comply with strict JSON rules and complicates our Logstash configuration file, we will use the pipeline approach to cut off the first two lines of the output with the tail command.

So, our command will look like this:

mongo --eval 'db.serverStatus()' | tail -n +3

Now, the output file will only contain the JSON part.

Next, we want to remove the NumberLong(x) and ISODate(x) from the JSON file. Again, sending these to Logstash will trigger a JSON parsing exception, and storing in Elasticsearch will fail. To transform the stream of the text, we will use the sed command with a regex pattern that will find NumberLong and ISODate data types. It will then replace it with the arguments that exist inside these data types:

{

….

"localTime": ISODate("2016-06-23T16:43:19.105Z"),

…

"connections": {

….

"totalCreated": NumberLong(62)

…

}

}

The example of the serverStatus ouput with NumberLong and ISODate data types

Now, using the pipeline command and adding the piece for transforming the text, the final command will look as follows:

mongo --eval 'db.serverStatus()' | tail -n +3 | sed 's/\(NumberLong([[:punct:]]\?\)\([[:digit:]]*\)\([[:punct:]]\?)\)/\2/' | sed 's/\(ISODate(\)\(.*\)\()\)/\2/'

Running this command will generate a pure JSON file without the MongoDB metadata.

In addition to the serverStatus command, we will also use the db.stats() command to gather storage metrics for specific databases. For the purpose of this tutorial, we created two databases for which we want to monitor storage allocation with the names test_mongo_db_1 and test_mongo_db_2.

Again, we will use the commands for gathering storage statistics for these two databases together with pipeline and tail commands to comply with the JSON formatting rules:

mongo test_mongo_db_1 --eval "db.stats()" | tail -n +3 mongo test_mongo_db_2 --eval "db.stats()" | tail -n +3

Configuring Logstash

Next, we will take the created commands from above and place them in the Logstash configuration file (logstash.config) using the exec input plugin. To forward the data to Elasticsearch, we will use the Elasticsearch output plugin:

input {

exec {

command => "mongo --eval 'db.serverStatus()' | tail -n +3 | sed 's/\(NumberLong([[:punct:]]\?\)\([[:digit:]]*\)\([[:punct:]]\?)\)/\2/' | sed 's/\(ISODate(\)\(.*\)\()\)/\2/'"

interval => 7

type => "db.serverStatus"

}

exec {

command => "mongo test_mongo_db_1 --eval 'db.stats()' | tail -n +3"

interval => 7

type => "db.test_mongo_db_1.stats"

}

exec {

command => "mongo test_mongo_db_2 --eval 'db.stats()' | tail -n +3"

interval => 7

type => "db.test_mongo_db_2.stats"

}

}

filter {

json {

source => "message"

}

}

output {

elasticsearch {

hosts => ["localhost:9200"]

}

}

The Logstash configuration for getting MongoDB metrics and sending it to the Elasticsearch

We’re now going to start the Logstash configuration using the next command:

./bin/logstash -f logstash.config

After a short while, you will begin to receive the first MongoDB metrics via Logstash.

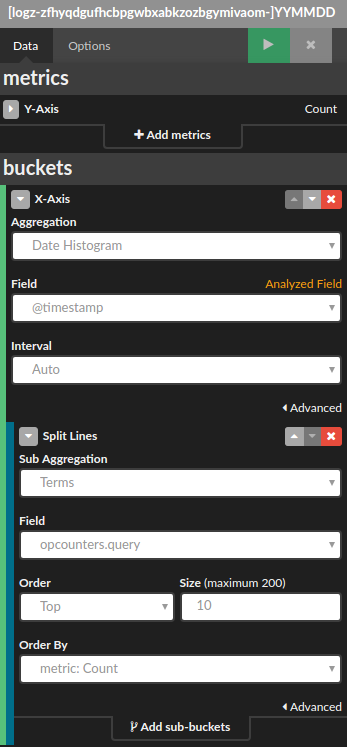

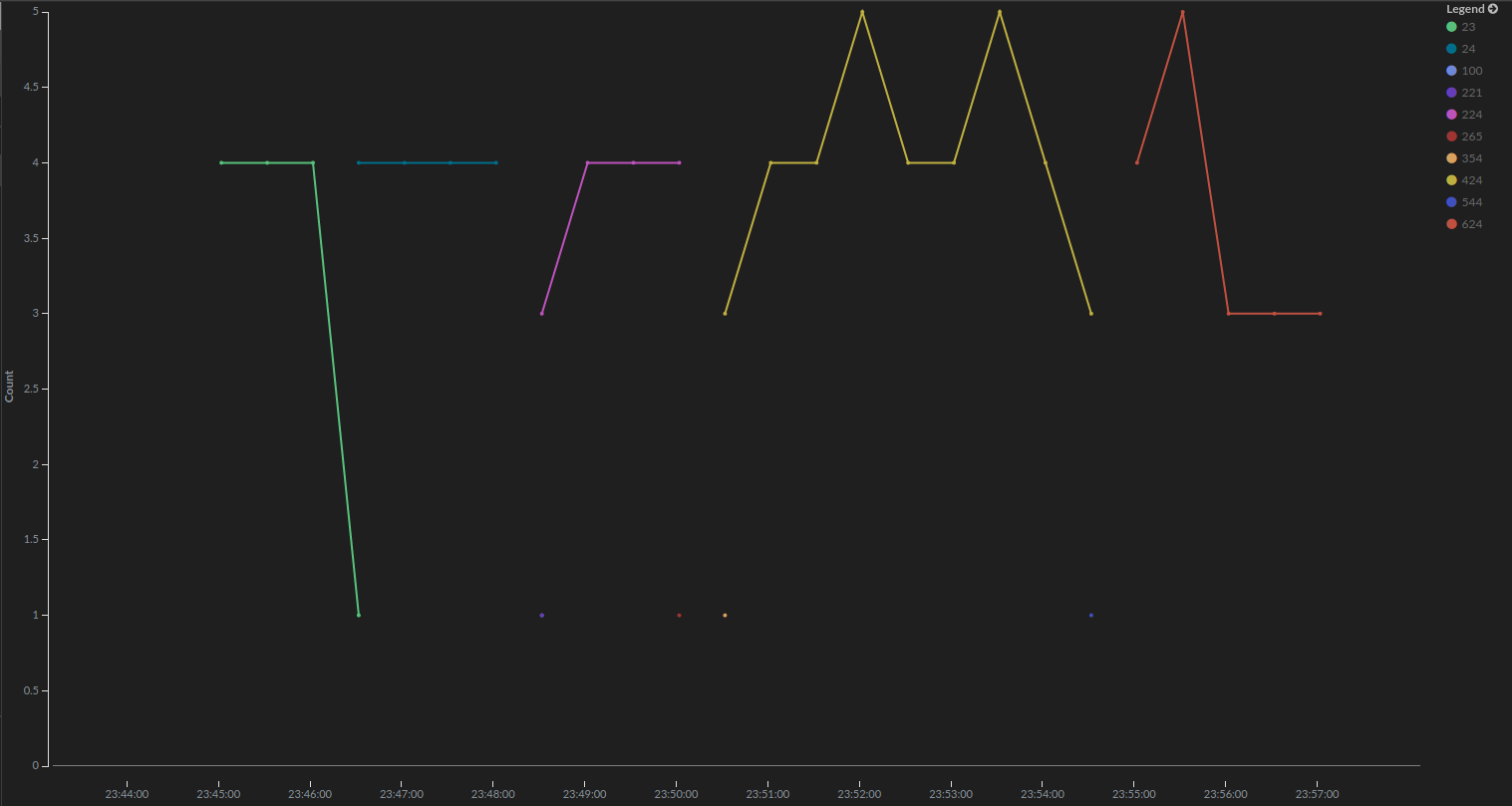

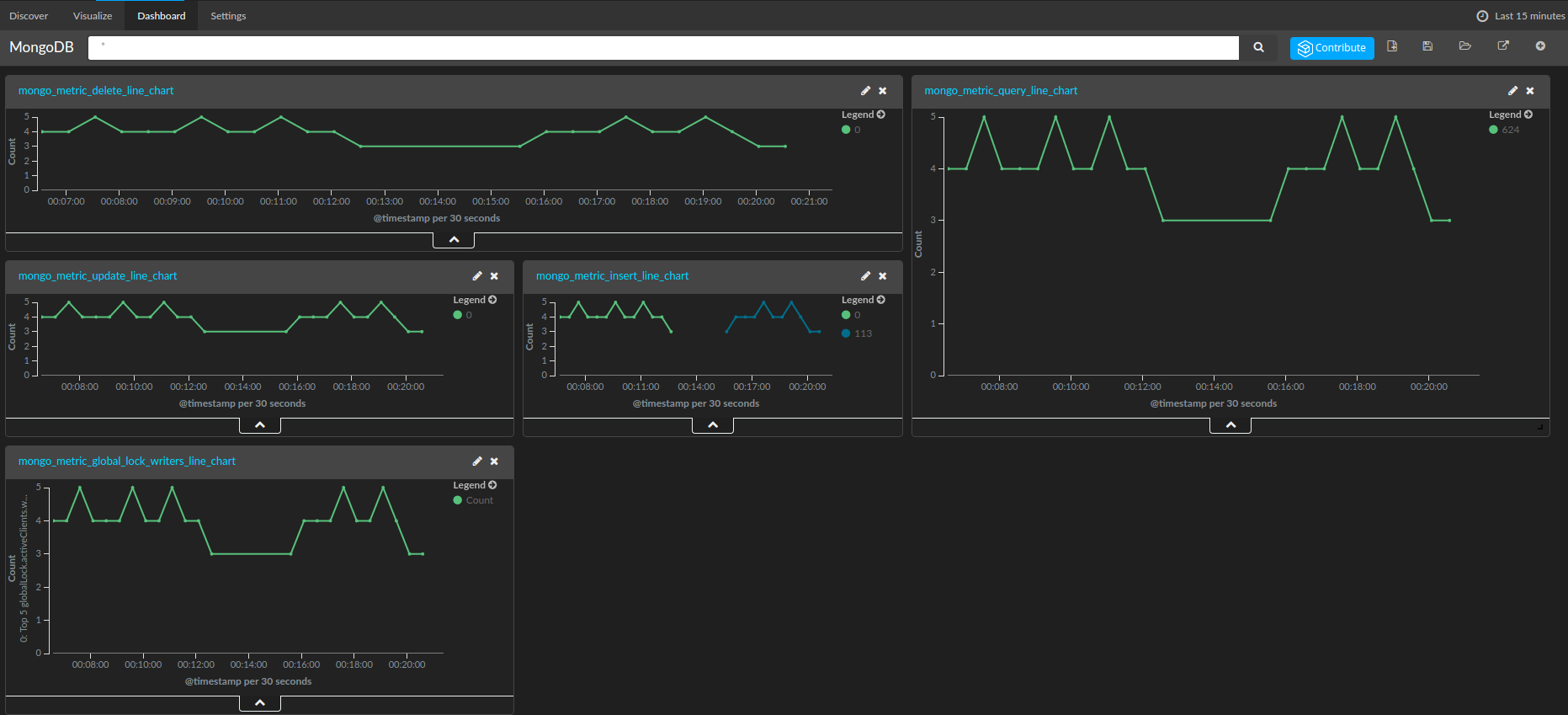

Discover section of Kibana after short time of waiting until Logstash start with sending metrics to the Elasticsearch

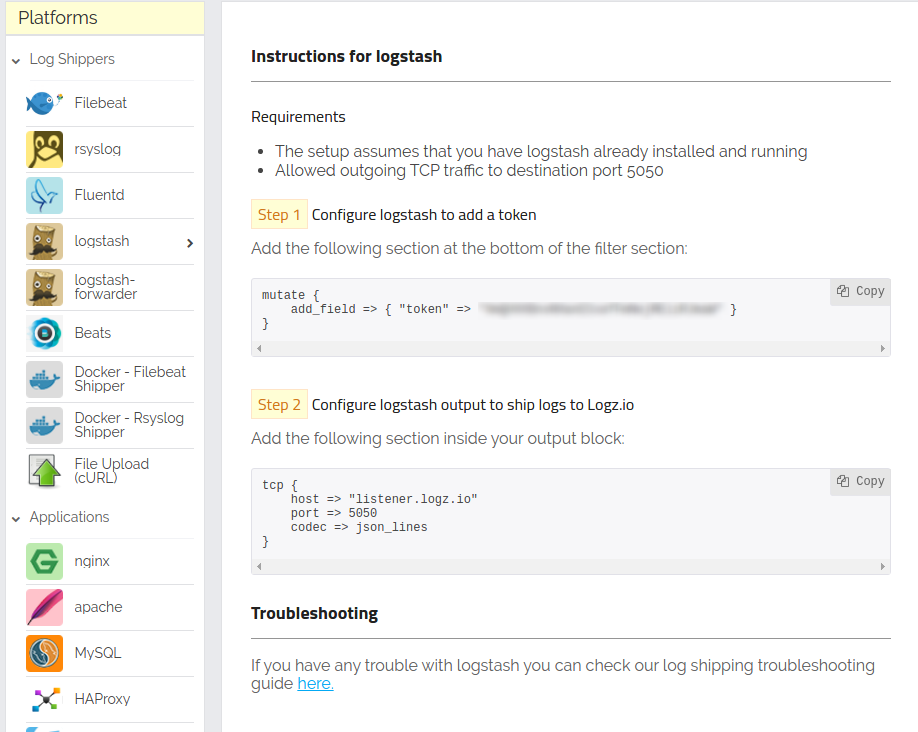

Shipping to Logz.io Using Logstash

Logz.io provides the ELK Stack as an end-to-end service so that the logs that you send to us are indexed and stored in Elasticsearch and available in real-time through Kibana.